(Beta) Create prompt development workflow

This is a beta feature available to customers using a Snorkel-hosted instance of Snorkel Flow. Beta features may have known gaps or bugs, but are functional workflows and eligible for Snorkel Support. To access beta features, contact Snorkel Support to enable the feature flag for your Snorkel-hosted instance.

Prompt development, sometimes called prompt engineering, is the process of designing and refining inputs to guide AI models, such as large language models (LLMs), to produce high-quality, task-specific outputs. A prompt development workflow consists of uploading a text dataset, selecting an LLM, and crafting system prompts, user prompts, or both to tailor the model's responses. Prompt versioning lets you iterate on your prompts and compare the results as you refine them.

Prerequisite

Enable the models you need in the Foundation Model Suite, which is where you set up and manage external models. Learn more about using external models.

Create a prompt development workflow

Upload input dataset

- Navigate to the Datasets page.

- Select Upload new dataset.

- Select the train split when uploading.

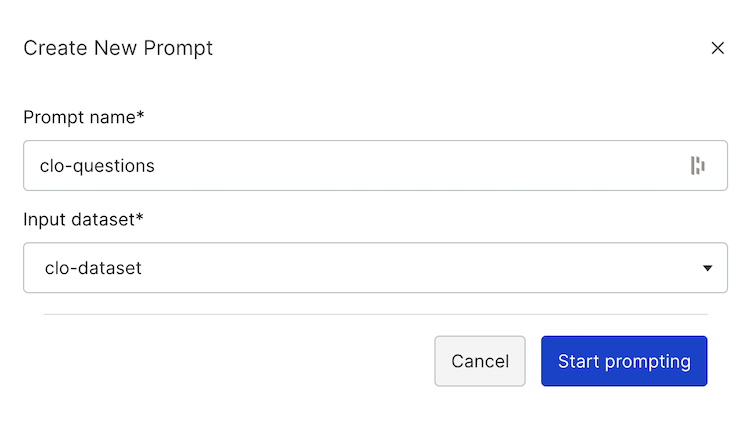
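If your text data is not yet in a file, a short script can produce one to upload. The following is a minimal sketch that assumes your instance accepts CSV uploads; the column names `uid` and `text` are hypothetical and should be replaced with whatever your dataset requires.

```python
import csv
import io

# Hypothetical column names, chosen for illustration only.
FIELDNAMES = ["uid", "text"]


def build_csv(texts):
    """Return CSV content with a header row and one row per input text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    for index, text in enumerate(texts):
        # The csv module quotes commas and double quotes inside the text.
        writer.writerow({"uid": index, "text": text})
    return buffer.getvalue()


csv_content = build_csv([
    "The delivery arrived two days late.",
    'She said "great service", and meant it.',
])
```

To save the result to disk before uploading, write `csv_content` to a file opened with `open("train.csv", "w", newline="")`, where `train.csv` is a placeholder file name.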

Create a prompt workflow

- Go to the Prompts page.

- Select Create Prompt.

- Name your workflow.

- Associate it with an input dataset.

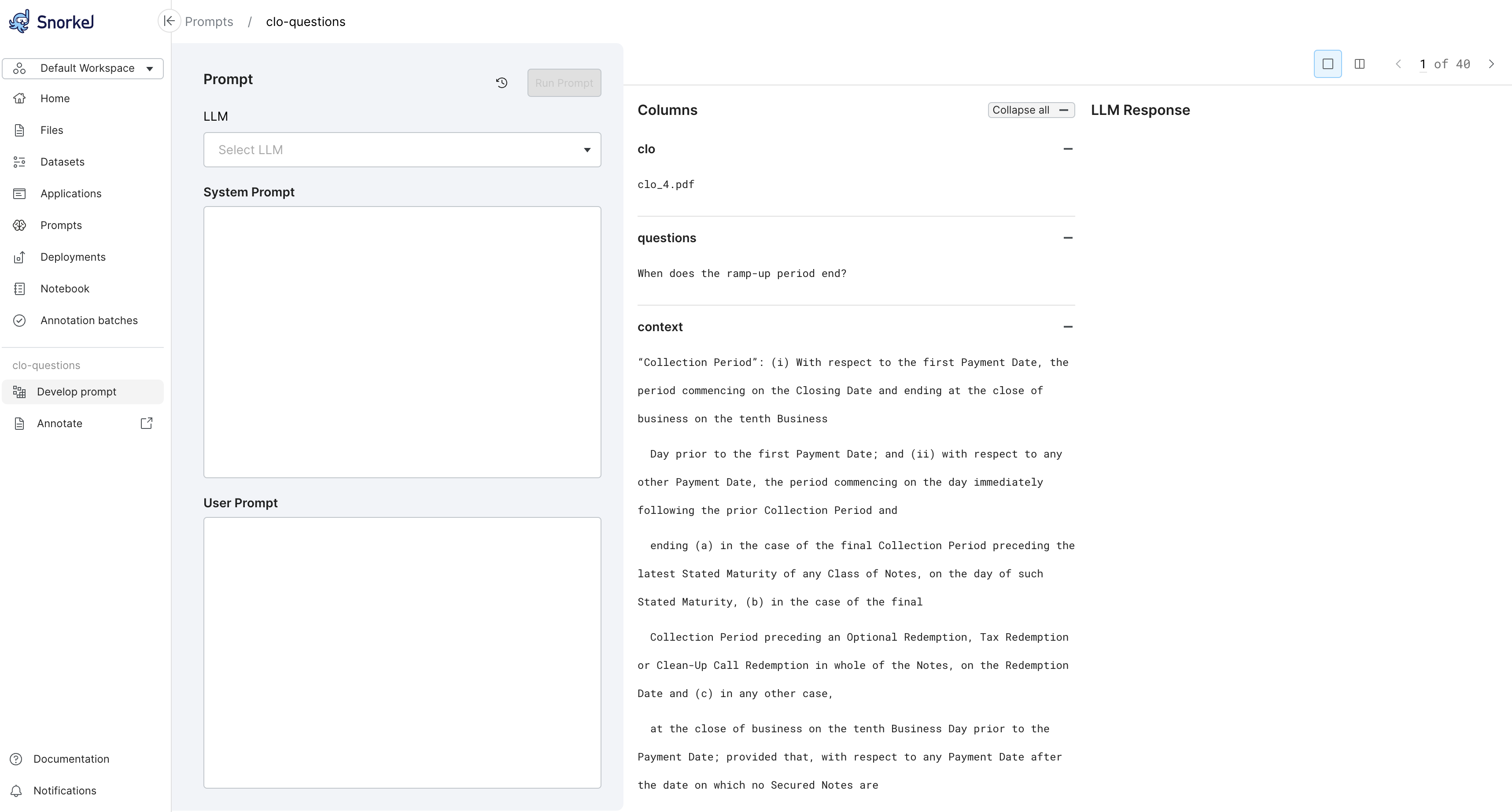

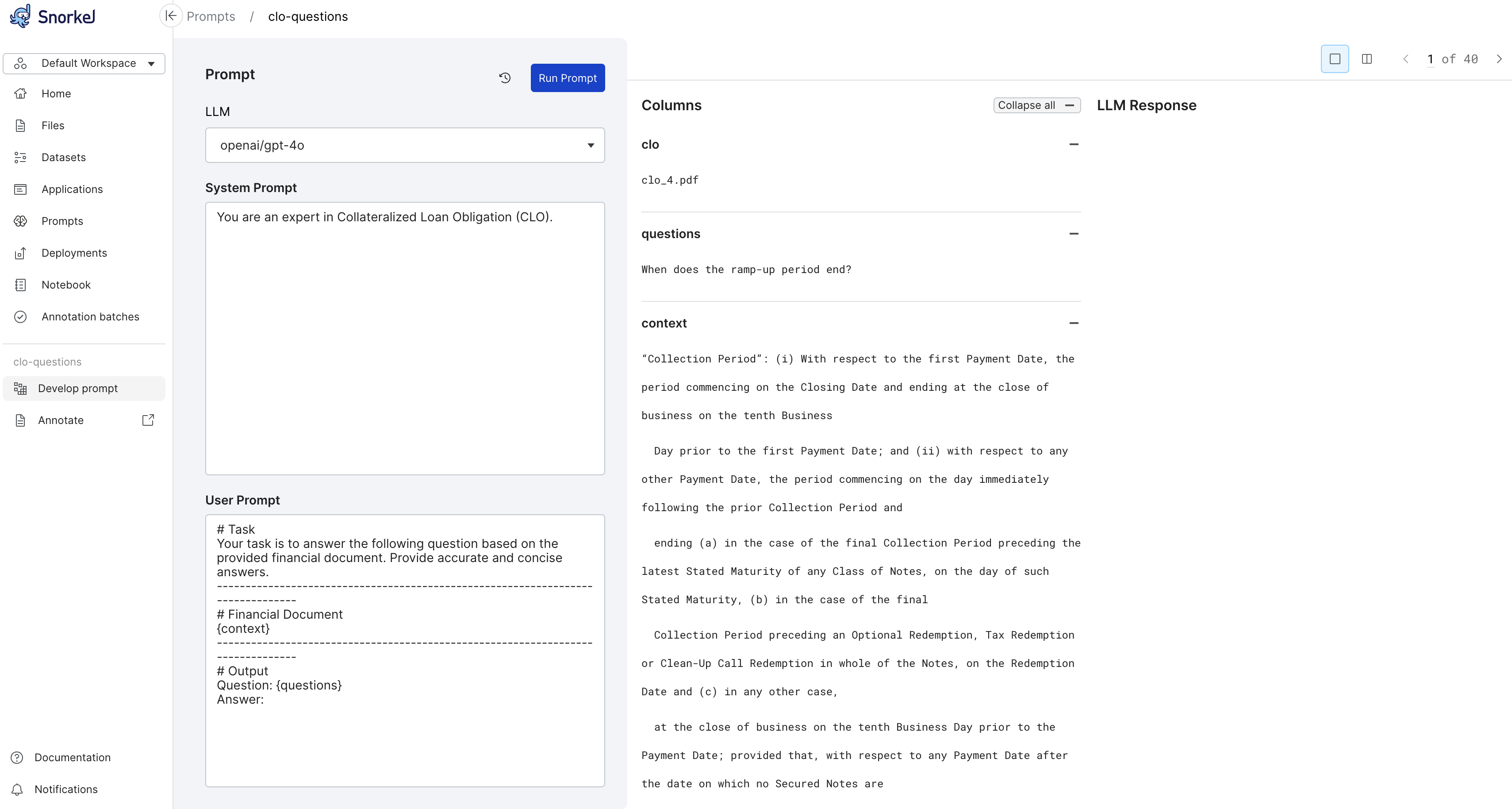

You will see the initial prompt workflow page:

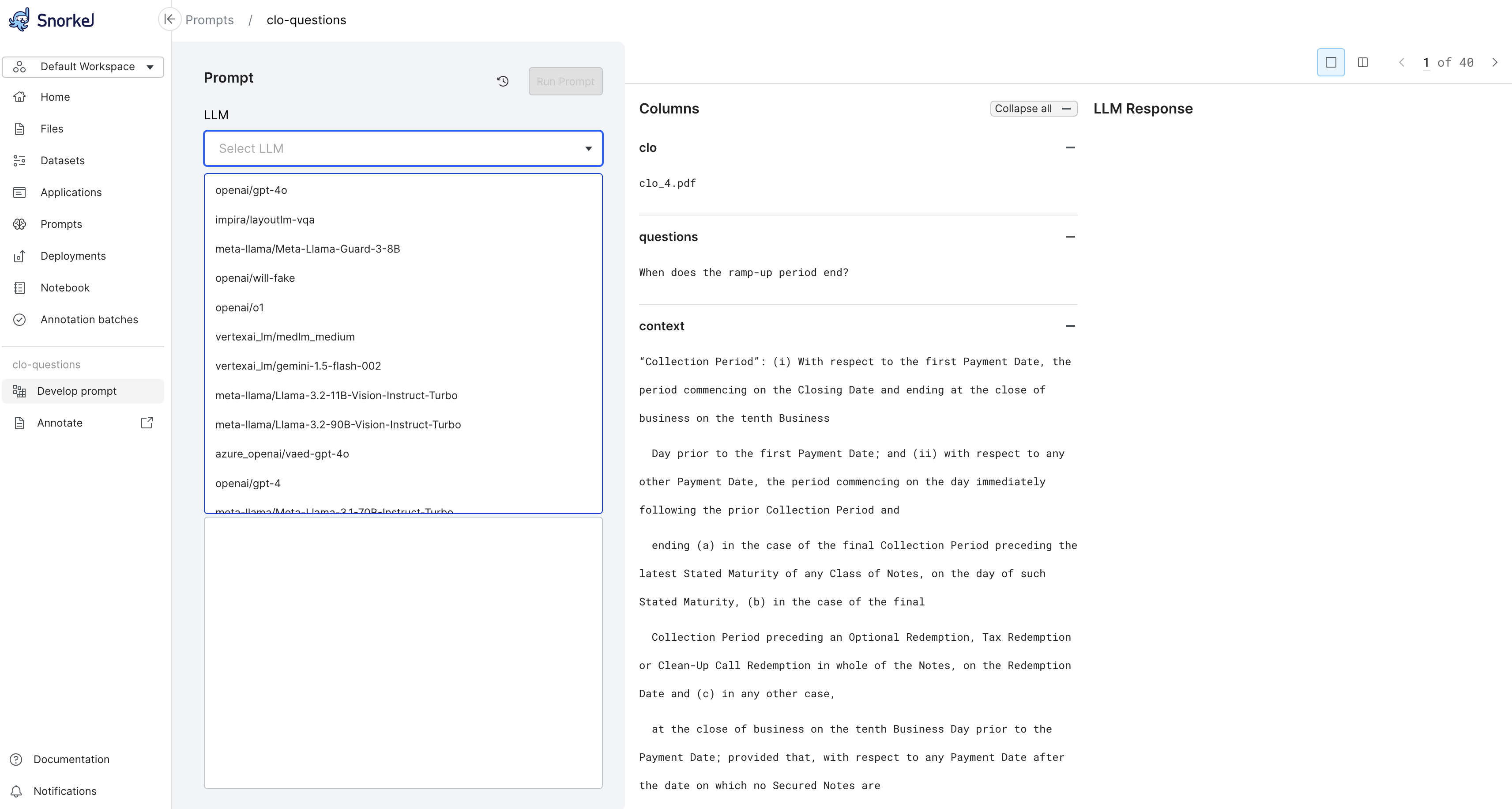

Select model

- Choose an LLM from the dropdown menu.

- Switch models as needed to optimize results.

- If required, enable additional models via the Foundation Model Suite.

Enter and run prompts

- Configure prompts, using system prompts, user prompts, or both as needed.

For more information about prompts, see Prompt development overview.

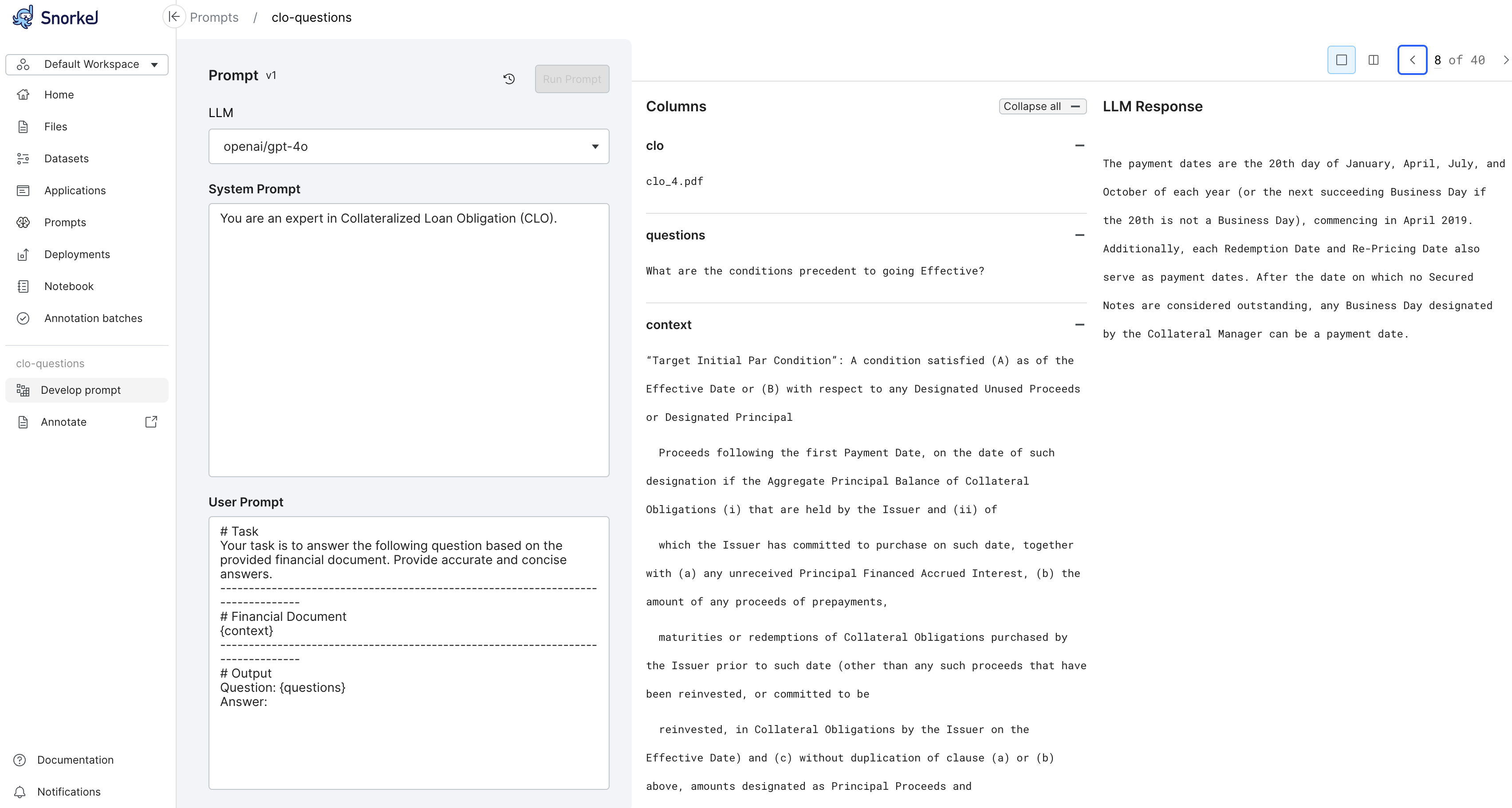

- Execute the workflow and review responses for each input.

- To improve responses, iterate on your prompts or LLM settings and re-run the workflow.

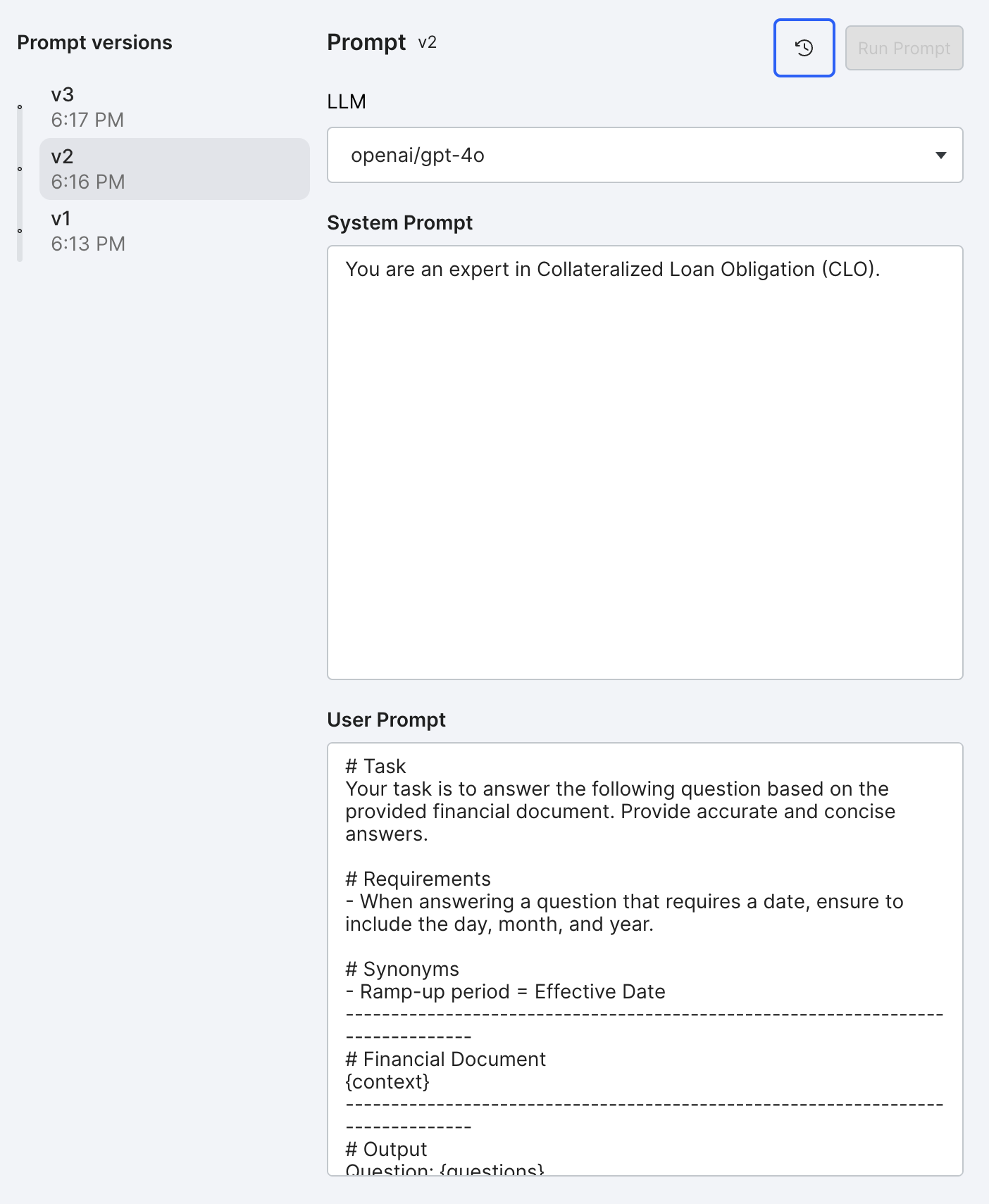
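To make the roles of system and user prompts concrete, the sketch below shows one common way the two are combined with a single dataset row before being sent to a chat-style LLM. It is illustrative only: the `role`/`content` message layout is a widely used convention, not a confirmed description of what Snorkel Flow sends, and `SYSTEM_PROMPT`, `USER_TEMPLATE`, `build_messages`, and the `text` column are hypothetical names.

```python
from string import Template

# Hypothetical prompts for a sentiment task.
SYSTEM_PROMPT = "You are a support analyst. Answer with one word."
USER_TEMPLATE = Template("Classify the sentiment of this review: $text")


def build_messages(row, system_prompt=SYSTEM_PROMPT, user_template=USER_TEMPLATE):
    """Combine an optional system prompt with a user prompt filled from one row."""
    messages = []
    if system_prompt:
        # The system prompt sets overall behavior and is the same for every row.
        messages.append({"role": "system", "content": system_prompt})
    # The user prompt is rendered once per row; extra columns in the row are ignored.
    messages.append({"role": "user", "content": user_template.substitute(row)})
    return messages


messages = build_messages({"uid": 1, "text": "Great product"})
```

Passing an empty `system_prompt` yields a single user message, which corresponds to using a user prompt on its own.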

Manage prompt versions

- Use the versioning feature to compare prompts and responses over time.
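Conceptually, each version captures the model and prompts used for a run so that later versions can be compared against earlier ones. The following sketch models that idea outside the product, using only the Python standard library. It is not how Snorkel Flow stores versions; `PromptVersion`, `PromptHistory`, and the model name `model-a` are hypothetical.

```python
import difflib
from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    number: int
    model: str
    system_prompt: str
    user_prompt: str


@dataclass
class PromptHistory:
    versions: list = field(default_factory=list)

    def save(self, model, system_prompt, user_prompt):
        """Record a new version, numbered from 1 in the order saved."""
        version = PromptVersion(len(self.versions) + 1, model, system_prompt, user_prompt)
        self.versions.append(version)
        return version

    def diff_user_prompts(self, a, b):
        """Return a unified diff of the user prompts of versions a and b."""
        old = self.versions[a - 1].user_prompt.splitlines()
        new = self.versions[b - 1].user_prompt.splitlines()
        return list(difflib.unified_diff(old, new, f"v{a}", f"v{b}", lineterm=""))


history = PromptHistory()
history.save("model-a", "Be concise.", "Summarize the review.")
history.save("model-a", "Be concise.", "Summarize the review in one sentence.")
changes = history.diff_user_prompts(1, 2)
```

Here `changes` holds the diff lines between the two user prompts, which can be printed with `print("\n".join(changes))` to see what was edited between versions.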