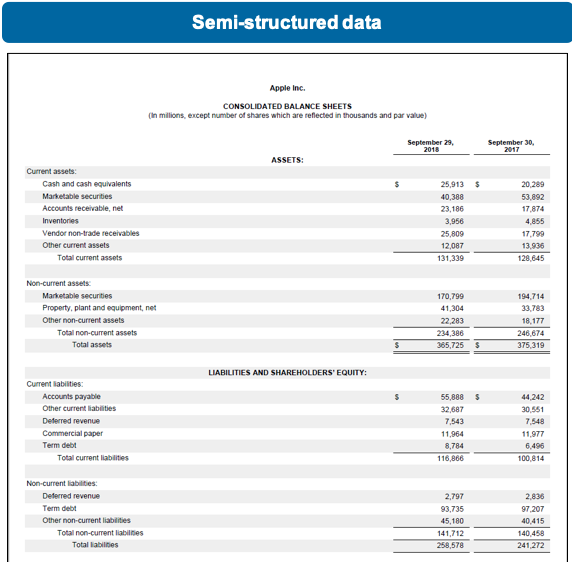

Extraction from PDFs: Extracting balance sheet amounts

In this tutorial, you will use Snorkel Flow to extract balance sheet amounts from the SEC filings of public companies.

Snorkel Flow supports the extraction of structured information from semi-structured documents such as PDFs, HTML, and docx files. Common use cases extract information from financial filings, insurance claims, and medical reports. These documents are converted to a special format called "Rich Doc" in the platform. This conversion allows you to leverage text, layout, and image modalities to write labeling functions (LFs) and train models.

In this tutorial, you will learn how to:

- Upload data into Snorkel Flow

- Create an application

- Add ground truth

- Define labeling functions

- Train a model

Upload data

Snorkel Flow can ingest data from a range of storage options, including cloud storage, databases, or local files. For PDF extraction applications, the input data must include the following fields:

| Field name | Data type | Description |

|---|---|---|

uid | int | A unique id mapped to each row. This is standard across all Snorkel Flow datasources. |

url | str | The file path of the original PDF. This will be used to access and process the PDF. |

For this tutorial, use the provided dataset from in AWS S3 Cloud Storage. Start by creating your new dataset.

-

Select the Datasets option from the left menu.

-

Select the + Upload new dataset button on the top left corner of your screen.

-

In the New Dataset window, enter the following information:

-

Dataset name: Enter

balance-sheets-dataset. -

Select a data source: Select Cloud Storage as your data source.

-

File path and Split: Add these datasets:

File path Split s3://snorkel-native-pdf-sample-dataset/splits/train.csvtrain s3://snorkel-native-pdf-sample-dataset/splits/valid.csvvalid s3://snorkel-native-pdf-sample-dataset/splits/test.csvtest

-

-

Select Verify data source(s) to run data quality checks to ensure data is cleaned and ready to upload.

-

After clicking Verify data source(s), select

uidfrom the UID column dropdown box. Theuidis the unique entry ID column in the data sources. -

Select Add data source(s).

Once the data is ingested from all three data sources, you can create an application with your PDF extraction dataset. For more information about uploading data, see Data upload.

Create an application

There are two categories of PDF documents:

- Native PDFs are documents that were created digitally. These PDFs can be parsed without additional processing.

- Scanned PDFs are created from scans of printed documents. These PDFs don't have the metadata that we need to parse layout information. Scanned PDFs require additional preprocessing with an optical character recognition (OCR) library.

The application creation process is slightly different for these two formats. This tutorial uses native PDFs.

You will extract the numbers from the Consolidated Balance Sheets provided in public company filings, and classify these numbers into the following classes: ASSETS, LIABILITIES and EQUITY.

-

Select the Applications option in the left-side menu and select Create application to create a new application. Enter the values provided in the table:

Stage Field Value Start Application name line-item-classificationData Dataset balance-sheets-datasetLabel schema Data type PDF Task type Extraction PDF type Native PDF PDF URL field urlYou selected the dataset created in the previous step, defined the data and task type, and specified where the PDFs can be found (

url). -

Select Generate Preview to generate and display a preview sample on the right from the input documents.

-

Edit the Label schema table:

-

- Select Add new label and add

ASSETS. Next, addLIABILITIESandEQUITYto the table as new labels. These are the entities to extract. - Edit the

NEGATIVElabel and rename it toOTHER. Any data point that does not fall into one of the positive classes will be labeled asOTHER.

Do not edit theUNKNOWNlabel. This is used for unlabelled data. - Select Next.

- Select Add new label and add

-

In the Preprocessors pane, select the following preprocessing operations to perform on the dataset:

- Split docs into pages: Yes

- Page split method: PageSplitter

- PageSplitter window_size: 0

- Extraction method: NumericSpanExtractor from an existing extractor

- NumericSpanExtractor field: rich_doc_text

-

Select Commit for the

PageSplitterand theNumericSpanExtractoroperators.

Operators perform transformations on your input data. You can add other operators in the background to pre-compute useful features to help define labeling functions. For more information on these operators, see PDF-based operators. ThePageSplitterand theNumericSpanExtractortransformations are visualized in the Preview sample pane on the right side of your screen.- The

PageSplitteroperator splits the documents into pages. This split helps us decrease memory usage, retrieve metadata more efficiently, and improve latency. - The

NumericSpanExtractoroperator is used to extract all numeric values as candidate spans from the raw text. The Preview sample pane highlights the numeric values.

- The

-

Click Next to start a job that runs the preprocessing operators on the entire dataset.

-

In Development Settings, select Yes using

span_textto Compute embeddings. -

Select Go To Studio.

To set up a Scanned PDF application, you would select the Scanned PDF, no need to run OCR option for PDF type. For more information about preprocessing scanned PDFs, see Scanned PDF Guide.

Add ground truth

Snorkel Flow allows you to label your data with programmatic labels. First, you must establish ground truth with annotations to validate the performance. When you start a new project, you can annotate your data using our Annotation Studio. For this tutorial, you will annotated data.

- Select Overview in the left-hand menu.

- Select View Data Sources to view the data sources that we have added. In this view, you can see the total number of data points that are extracted for each data source.

- Select Upload GTs.

- Enter the following values:

- File path:

s3://snorkel-native-pdf-sample-dataset/native_pdf_ground_truth.csv - File format:

CSV - Label column:

label - UID column:

x_uid

- File path:

- Select Add.

- Refresh the page after uploading the ground truth.

The# of GT labelscolumn has non-zero values. - Click Go To Studio to return to the Studio page.

Review data in Develop (Studio)

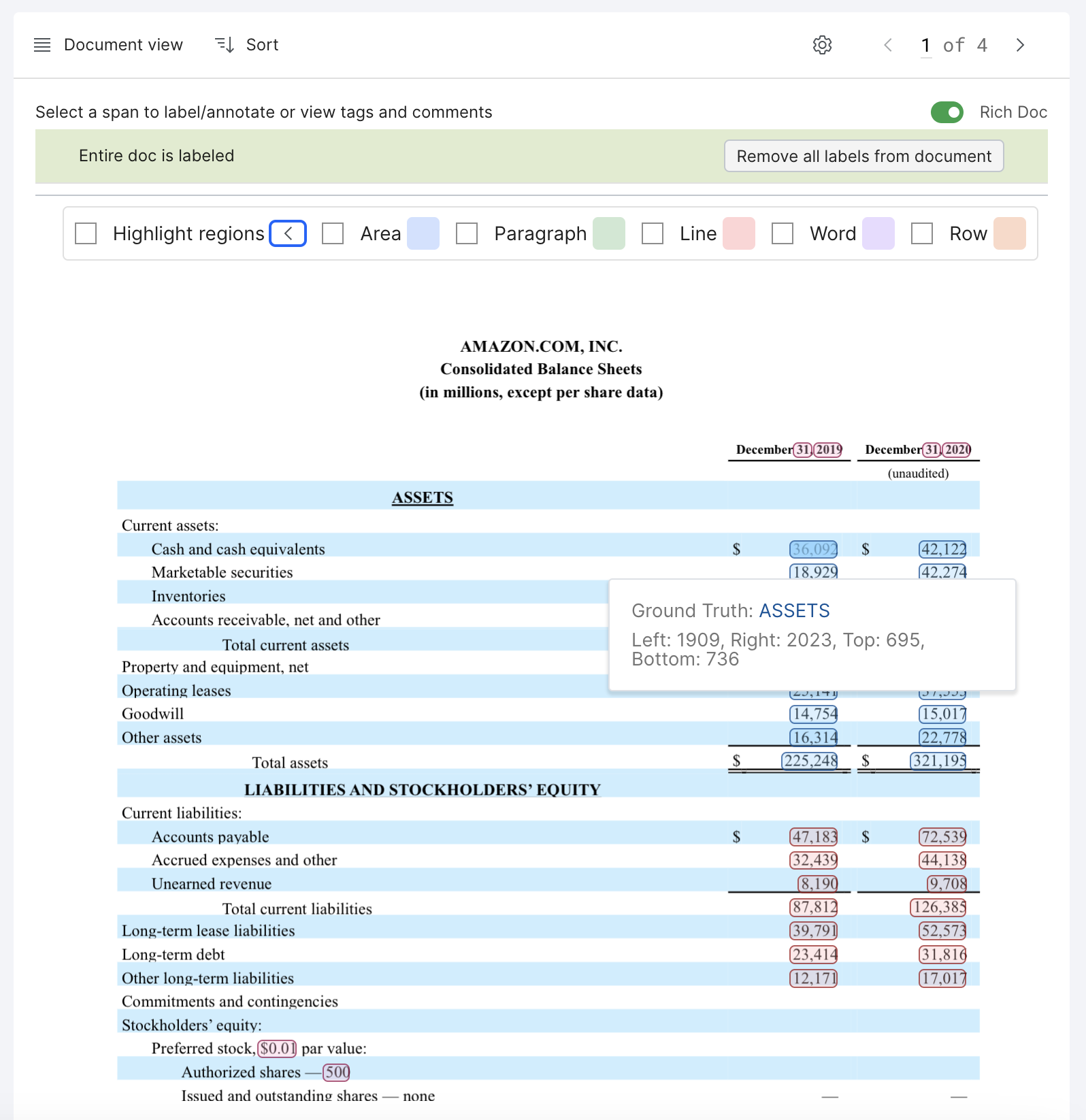

Snorkel Flow extracts candidate spans from the raw text of the document using a heuristic. Common span types include numbers, dates, and email addresses. You can define labeling functions to assign labels to the candidate spans in the document. The labels are aggregated and used to train a classification model. The spans are highlighted with bounding boxes and color-coded by label.

- Use your cursor to hover over any word in the document. You can see the bounding box coordinates of the word as measured in pixels.

- Select Rich Doc toggle to see the raw text extracted from the document.

- Select the arrow to expand Highlight regions. This allows you to highlight the bounding boxes of the different regions in the document: row, word, line, paragraph, and area.

Develop (Studio) shows thedevsplit. Thedevsplit is a sample of the train split used for iterating on labeling functions. - Select the Dev split dropdown in the top toolbar.

- Select Resample data.

- Set the Sample size to 4, and select Resample dev split.

Next, you'll define your labeling functions.

Define labeling functions

A labeling function (LF) is a programmatic rule or heuristic that assigns labels to unlabeled data. LFs are the key data programming abstraction in Snorkel Flow. Each labelling function votes on whether a data point has a particular class label. The Snorkel Flow label model is responsible for estimating LF accuracies and aggregating them into training labels.

For PDF extraction applications, we add a few text fields that are useful for defining LFs using the RichDocSpanRowFeaturesPreprocessor operator. These fields are based on heuristics as defined below:

| Field | Description |

|---|---|

rich_doc_row_text_inline | text in the same row as the span |

rich_doc_row_header | text that is the furthest to the left of the span |

rich_doc_inferred_row_headers | text that is above the span and indented to the left |

rich_doc_row_text_before | text in the row before the span |

rich_doc_row_text_after | text in the row after the span |

You can use these columns to define text-based LFs. You can also use the word bounding boxes (top, left, bottom and right) to define location-based LFs.

- Create new labeling functions.

- In Patterns, select your LF template by typing

/, followed by the pattern, such asKeyword,Regex, orNumeric. - Enter the requirements for the labeling function.

- Select a label from the dropdown menu.

- Select Preview LF to see the precision and coverage of your LF.

- Select Create LF.

The new LF shows up in the Labeling Functions pane.

- In Patterns, select your LF template by typing

Using the steps above, add these LFs to your application:

| LF Template | Settings | Label | Explanation |

|---|---|---|---|

| Keyword | rich_doc_inferred_row_headers [CONTAINS] Assets, Cash, Land | ASSETS | The LF checks if the inferred row header of the span contains the keywords Assets, Cash or Land. |

| Keyword | rich_doc_row_text_inline [CONTAINS] Liabilities, Payable, Debt | LIABILITIES | The LF checks if the text in the same as the span contain the keywords Liabilities, Payable or Debt. |

| Regex | rich_doc_row_header matches pattern Total.{1,15}equity | EQUITY | The LF checks if the row header of the span matches the regex pattern. |

| Numeric | right [<=] 1200 | OTHER | The LF checks if the span's right boundary is less than or equal to 1200 pixels i.e. if the span is on left side of the page. |

Some LF templates are available for only PDF extraction applications. These LFs allow us to combine the text and layout information to define LFs.

Using the steps above, add these LFs to your application:

| LF Template | Settings | Label | Explanation |

|---|---|---|---|

| Span Regex Proximity Builder | If the span is up to [6] [LINE(s)] [AFTER] the regex pattern current assets: | ASSETS | This LF specifies that spans up to 6 lines after the expression “current assets:” will be labeled ASSETS. |

| Span Regex Row Builder | If the span is [0] rows before and [5] rows after the regex pattern current liabilities: | LIABILITIES | This LF specifies that spans up to 5 rows after the expression “current liabilities:” will be labeled LIABILITIES. |

| Rich Doc Expression Builder | Evaluate Common stock with SPAN.left > PATTERN1.right and SPAN.top >= PATTERN1.top | EQUITY | This LF specifies that spans to the right and below the expression “Common stock” will be labeled as EQUITY. |

For more information on Rich Doc builders, see Rich document LF builders.

You can also encode your custom labeling function logic using the Python SDK. For examples using the RichDocWrapper object, refer to the SDK reference.

Train a model

You can aggregate the newly defined labeling functions to train a model.

- In the Models pane, select Train a model.

- For the Model architecture, select Logistic Regression (TFIDF).

- To include the text fields used to define labeling functions, select Input Fields and select

rich_doc_inferred_row_headers,rich_doc_row_text_inline,rich_doc_row_header, andrightfrom the dropdown. - Leave the default settings in Train options.

- Select Train custom Model to generate labels using the LFs and train a logistic regression model using the labels.

Once the model training is completed, you will see your first model in the Models pane.

Snorkel Flow offers default configurations for several commonly used models. For more information on models, see Model training.

Snorkel Flow enables users to iteratively generate better labels and better end models. For information about getting metrics and improving model performance, see Analysis: Rinse and repeat.

Conclusion

You have set up and iterated on a PDF extraction application in Snorkel Flow. To learning more about PDF extraction features in Snorkel, see this documentation:

- Learn how to work with scanned documents with our OCR guide.

- Read more about PDF-based Operators.

- Check out more examples of Rich Document Builders.

The Snorkel Flow Python SDK provides greater flexibility to define custom operations. Select Notebook on the left-side pane to access the SDK. See the SDK reference to learn more about custom operators and custom labeling functions.