Onboarding and defining the GenAI evaluation artifacts

Evaluation for GenAI output begins with onboarding, where you define the key elements of the benchmark. This includes identifying the evaluation criteria, defining data slices, gathering reference prompts, and writing evaluators.

To follow along with an example of how to use Snorkel's evaluation framework, see the Evaluate GenAI output tutorial.

Criteria

These are the elements that the model's output will be evaluated against:

- Correctness: Is the output factually accurate?

- Compliance: Does the output meet regulatory and policy requirements?

- Robustness: Is the model resistant to adversarial attacks?

- Fairness: Does the model avoid harmful biases?

To add a new criteria:

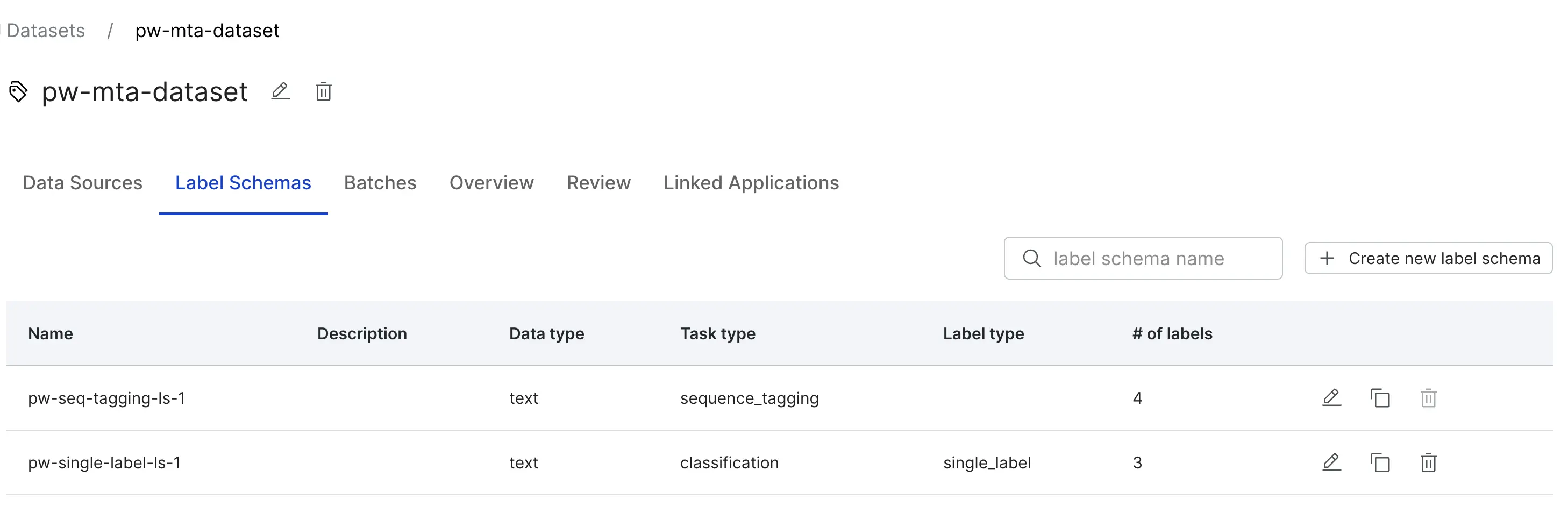

- Select the Datasets page.

- Select your dataset.

- Select the Label Schemas tab.

- Select the Create new label schema button on the top right.

- Fill out the forms in the dialog according to your criteria.

Data slices

Data slices represent specific segments of the dataset that are particularly important for business objectives. Data slices could be based on:

- Topic (e.g., administrative queries vs. dispute queries).

- Language (e.g., responses in Spanish).

- Custom slices relevant to enterprise-specific use cases.

Reference prompts

A set of prompts that the model will be tested against. The prompts should be representative of all the types of questions you want your model to perform well on. These can be collected, curated, authored, and/or generated. Prompts can include questions that were:

- Mined from historical data (user query streams from real questions asked to a chatbot, historical query logs from questions asked to human agents, etc).

- Provided by Subject Matter Experts (SMEs).

- Synthetically generated.

Each time you iterate on your fine-tuned model, the responses to the prompts will change, but the prompts themselves will remain consistent across iterations.

Evaluators

These are functions for assessing if an LLM's response satisfies your defined criteria. Each evaluator takes in a datapoint and returns true or false. They can include:

- A prompted LLM serving as a judge, commonly referred to as LLM-as-a-judge.

- Automated comparison to an SME-annotated "gold" dataset (e.g. embedding or LLM similarity score between new LLM and gold SME responses).

- An off-the-shelf classifier for checking PII, toxicity, or specific compliance criteria.

- A heuristic rule.

- A custom predictive model built with programmatic supervision.

(Optional) Ground truth

To get started quickly, you can complete onboarding without gathering ground truth labels. This allows you to more quickly generate your first benchmark evaluation before involving subject matter experts (SMEs) in annotation. When you run the benchmark first, you have a better idea of how and where to use your SME time impactfully. In the refinement step, we explain the best way to gather ground truth labels to aid in your evaluation workflow. However, if you already have ground truth labels, you can also include them in this onboarding stage, which will result in more signal in your first evaluation benchmark.

Next steps

Once you have defined your criteria, data slices, reference prompts, and evaluators, you can run your first evaluation. See running your first evaluation for more information.