Class-level metrics

Class-level metrics display model performance on a per-class basis to help you decide where to focus your efforts.

This topic explains the class-level metrics available in Snorkel Flow:

Span precision

Span precision evaluates models in tasks like named entity recognition (NER) and other sequence tagging tasks that involve identifying and labeling multi-token entities (spans). It measures how accurately the model identifies the spans of text that correspond to specific entities or labels.

To calculate span precision, consider True Positive and False Positive:

- True Positive (TP): A span that the model correctly identifies and labels as an entity, matching both the entity type and exact boundary.

- False Positive (FP): A span that the model identifies and labels as an entity, but that has the wrong type or boundary, or does not match any true entity in the data.

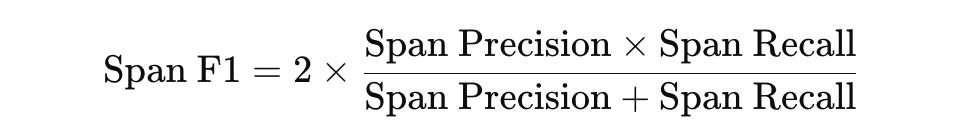

This is the formula for span precision:

A high span precision indicates that most of the spans the model identifies as entities are accurate, meaning they correctly match the entities' boundaries and types as defined in the labeled data. However, span precision alone does not account for whether the model missed any actual spans, which is why it's often used in conjunction with span recall and span F1 score for a more complete evaluation.
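As a minimal sketch of this calculation, assume each span is a hypothetical `(start, end, label)` tuple of token offsets. The tuple representation and the `span_precision` helper are illustrative only and are not part of Snorkel Flow's API. Returning 0.0 when the model predicts no spans for a class is also an assumed convention.

```python
def span_precision(predicted, gold, label):
    """Exact-match span precision for one class: TP / (TP + FP)."""
    pred = {s for s in predicted if s[2] == label}
    true = {s for s in gold if s[2] == label}
    tp = len(pred & true)   # same boundaries and same type as a gold span
    fp = len(pred - true)   # wrong boundary, wrong type, or no gold match
    return tp / (tp + fp) if (tp + fp) else 0.0


# Hypothetical gold and predicted spans as (start, end, label).
gold = [(0, 2, "PER"), (5, 7, "ORG"), (9, 10, "PER")]
predicted = [(0, 2, "PER"), (5, 6, "ORG"), (9, 10, "ORG"), (12, 13, "PER")]

per_precision = span_precision(predicted, gold, "PER")
org_precision = span_precision(predicted, gold, "ORG")
```

In this example, `PER` scores 0.5 because one of its two predicted spans matches a gold span. `ORG` scores 0.0 because one predicted span has the wrong end boundary and the other has the wrong type.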

Span recall

Span recall evaluates how well a model identifies all relevant spans in sequence tagging tasks, such as NER. Specifically, it measures the model's ability to correctly identify all instances of multi-token entities (spans) within the text.

To calculate span recall, consider True Positive and False Negative:

- True Positive (TP): A span that the model correctly identifies and labels, matching both the type and the exact boundaries of the true entity.

- False Negative (FN): A span that exists in the true data, but is missed by the model, either because the model didn’t identify it at all or didn’t match the entity’s boundaries or type correctly.

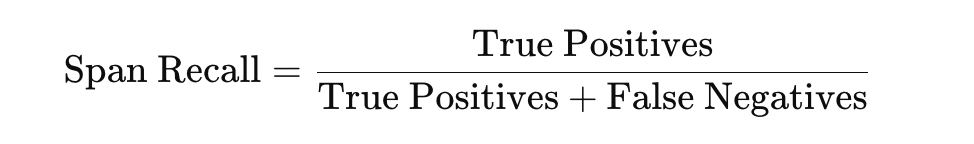

This is the formula for span recall:

A high span recall indicates that the model successfully identifies most or all relevant spans within the dataset, meaning it does not miss many entities. Like precision, span recall is most informative when used alongside span precision and the span F1 score, as this provides a balanced view of the model's ability to find and accurately label all entities.
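The following sketch computes span recall for one class under the same exact-match rule. The `(start, end, label)` tuples, the `span_recall` helper, and the `DRUG` and `DOSE` labels are hypothetical and chosen only for illustration. Returning 0.0 for a class with no gold spans is an assumed convention.

```python
def span_recall(predicted, gold, label):
    """Exact-match span recall for one class: TP / (TP + FN)."""
    pred = {s for s in predicted if s[2] == label}
    true = {s for s in gold if s[2] == label}
    tp = len(true & pred)   # gold spans the model reproduced exactly
    fn = len(true - pred)   # gold spans missed or matched with wrong boundary/type
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical gold and predicted spans as (start, end, label).
gold = [(0, 1, "DRUG"), (4, 6, "DRUG"), (8, 9, "DOSE"), (11, 12, "DRUG")]
predicted = [(0, 1, "DRUG"), (4, 5, "DRUG"), (8, 9, "DOSE")]

drug_recall = span_recall(predicted, gold, "DRUG")
dose_recall = span_recall(predicted, gold, "DOSE")
```

Here only one of the three gold `DRUG` spans is recovered exactly, so `DRUG` recall is one third. The prediction `(4, 5, "DRUG")` overlaps a gold span but has the wrong end boundary, so the gold span `(4, 6, "DRUG")` counts as a false negative.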

Span F1

Span F1 is a metric that combines span precision and span recall to provide a single measure of a model's performance in identifying and labeling multi-token entities (spans) in tasks like NER. It is particularly useful because it balances the trade-off between span precision and span recall, making it an effective metric when both false positives and false negatives are of concern.

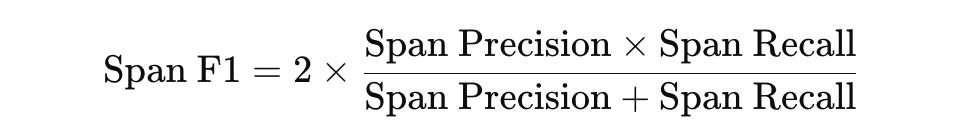

The Span F1 score is calculated as the harmonic mean of span precision and span recall:

A Span F1 score ranges from 0 to 1:

- A score of 0 indicates that the model failed to correctly identify any spans.

- A score of 1 indicates perfect precision and recall, meaning the model correctly identifies all spans without any errors or omissions.

The Span F1 score is especially valuable in scenarios where it is important to accurately identify spans (precision) and ensure that no spans are missed (recall), as it reflects the model's overall effectiveness in capturing the correct entities.
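To make the harmonic mean concrete, here is a small sketch with made-up span counts. The `f1_score` helper is hypothetical, and returning 0.0 when precision and recall are both zero is an assumed convention.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both inputs are zero
    return 2 * precision * recall / (precision + recall)


# Hypothetical span counts for one class.
tp, fp, fn = 6, 2, 4
precision = tp / (tp + fp)   # 0.75
recall = tp / (tp + fn)      # 0.6
span_f1 = f1_score(precision, recall)

# Equivalent form written directly in terms of the counts.
span_f1_from_counts = 2 * tp / (2 * tp + fp + fn)

# The harmonic mean sits closer to the lower of its two inputs:
# about 0.18 here, versus an arithmetic mean of 0.5.
unbalanced = f1_score(0.9, 0.1)
```

Both forms give a Span F1 of about 0.667 for these counts. The last line shows why a model cannot reach a high F1 by maximizing only one of the two metrics.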

Token precision

Token precision evaluates the accuracy of a model in sequence tagging tasks, such as NER, where each individual token (e.g., a word or punctuation mark) in a sequence is labeled. Token precision measures how accurately the model labels tokens as belonging to a specific category.

To calculate token precision, consider True Positive and False Positive:

- True Positive (TP): A token that the model correctly labels as belonging to the target category.

- False Positive (FP): A token that the model incorrectly labels as belonging to the target category when it does not.

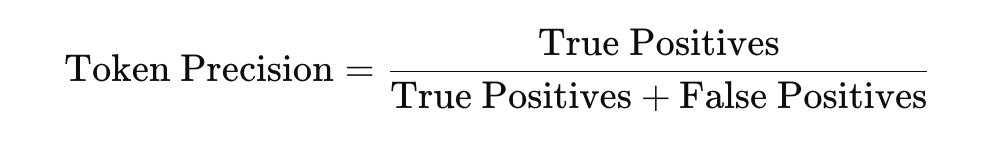

This is the formula for token precision:

A high token precision indicates that most of the tokens labeled as positive by the model are indeed correct, meaning there are few false positives. However, token precision alone does not account for missed tokens (false negatives), which is why it’s often reported along with token recall and the token F1 score to provide a balanced view of the model's performance.
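A minimal sketch of token precision, assuming predictions and ground truth are parallel lists with one label per token and that `"O"` marks tokens outside any entity. The list format, the `"O"` label, and the `token_precision` helper are assumptions for illustration, not Snorkel Flow's data format.

```python
def token_precision(pred_labels, gold_labels, label):
    """Token precision for one class: TP / (TP + FP)."""
    tp = fp = 0
    for pred, gold in zip(pred_labels, gold_labels):
        if pred != label:
            continue        # only tokens the model assigned to this class count
        if gold == label:
            tp += 1
        else:
            fp += 1
    return tp / (tp + fp) if (tp + fp) else 0.0


# One hypothetical label per token.
gold_labels = ["PER", "PER", "O", "ORG", "ORG", "O"]
pred_labels = ["PER", "O", "O", "ORG", "ORG", "ORG"]

org_precision = token_precision(pred_labels, gold_labels, "ORG")
per_precision = token_precision(pred_labels, gold_labels, "PER")
```

The model labels three tokens as `ORG` and two of them are correct, so `ORG` precision is two thirds. The single token labeled `PER` is correct, so `PER` precision is 1.0 even though the model missed the second `PER` token.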

Token recall

Token recall evaluates how well a model correctly identifies all relevant tokens that belong to a specific category. It measures the proportion of actual positive tokens that the model correctly labels as positive.

To calculate token recall, consider True Positive and False Negative:

- True Positive (TP): A token that the model correctly labels as belonging to the target category.

- False Negative (FN): A token that belongs to the target category but the model either misses or incorrectly labels.

This is the formula for token recall, where TP and FN are counts of tokens:

Token recall = TP / (TP + FN)

A high token recall indicates that the model is successfully identifying most or all of the relevant tokens for the target category, meaning it has few false negatives. However, token recall alone does not provide information on how accurately it labels those tokens, meaning it doesn't account for false positives. For a more comprehensive evaluation, use token recall alongside token precision and the token F1 score.
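The sketch below computes token recall for every class in one pass. It assumes parallel per-token label lists and an `"O"` label for tokens outside any entity. These conventions and the `token_recall_by_class` helper are hypothetical.

```python
from collections import Counter


def token_recall_by_class(pred_labels, gold_labels, ignore="O"):
    """Return {class: TP / (TP + FN)} for every class present in the gold labels."""
    tp, fn = Counter(), Counter()
    for pred, gold in zip(pred_labels, gold_labels):
        if gold == ignore:
            continue            # non-entity tokens are not a target class here
        if pred == gold:
            tp[gold] += 1
        else:
            fn[gold] += 1       # token missed or given a different label
    return {c: tp[c] / (tp[c] + fn[c]) for c in set(tp) | set(fn)}


# One hypothetical label per token.
gold_labels = ["PER", "PER", "O", "ORG", "ORG", "O", "LOC"]
pred_labels = ["PER", "O", "O", "ORG", "ORG", "ORG", "PER"]

recall_by_class = token_recall_by_class(pred_labels, gold_labels)
```

In this example `ORG` recall is 1.0 because both gold `ORG` tokens are found, even though the model also labels an extra token as `ORG`. That extra token lowers `ORG` precision but leaves recall unchanged.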

Token F1

Token F1 is a metric that combines token precision and token recall to provide a single measure of a model's performance in sequence tagging tasks, such as NER. It balances the trade-off between precision (how many labeled tokens are correct) and recall (how many relevant tokens are found) to give a holistic view of the model's accuracy in identifying and labeling individual tokens.

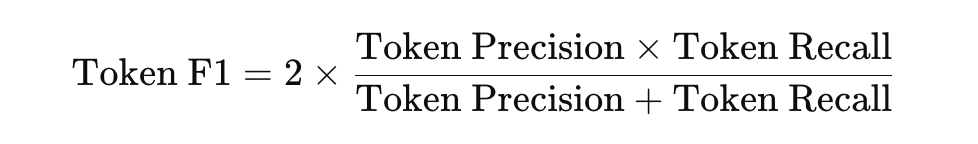

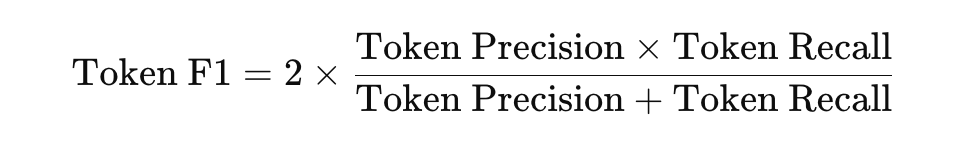

The Token F1 score is the harmonic mean of token precision and token recall:

The Token F1 score ranges from 0 to 1:

- A score of 0 indicates the worst possible performance, meaning the model failed in both precision and recall.

- A score of 1 indicates perfect precision and recall, meaning the model has correctly identified and labeled all relevant tokens without any errors.

The Token F1 score is especially useful when both false positives and false negatives are important, as it provides a balanced measure of the model's overall ability to accurately identify and label individual tokens in the dataset.
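As an illustrative sketch, the following computes token precision, recall, and F1 together for one class. The per-token label lists, the `"O"` label, and the `token_prf` helper are assumptions. Zero denominators are mapped to 0.0 as an assumed convention.

```python
def token_prf(pred_labels, gold_labels, label):
    """Return (precision, recall, f1) for one class at the token level."""
    pairs = list(zip(pred_labels, gold_labels))
    tp = sum(p == label and g == label for p, g in pairs)
    fp = sum(p == label and g != label for p, g in pairs)
    fn = sum(p != label and g == label for p, g in pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# A three-token ORG entity where the model misses the last token.
gold_labels = ["ORG", "ORG", "ORG", "O", "O"]
pred_labels = ["ORG", "ORG", "O", "O", "O"]

precision, recall, f1 = token_prf(pred_labels, gold_labels, "ORG")
```

The model earns a Token F1 of about 0.8 here, with precision 1.0 and recall two thirds. Under the exact-match span definitions earlier in this topic, the same prediction would not count as a true positive because its boundary differs from the gold span.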