Manage role-based access control (RBAC) for dataset connectors

This page walks through the process of controlling access to dataset upload connectors:

- Disable a data connector type (e.g., File Upload) for the entire Snorkel Flow instance.

- Share data connector credentials securely in-platform within the same workspace.

- Restrict data connector type usage based on user role and/or workspace.

Prerequisites

To manage dataset access controls, you need the following:

- An existing Snorkel Flow deployment.

- An account with Superadmin permissions.

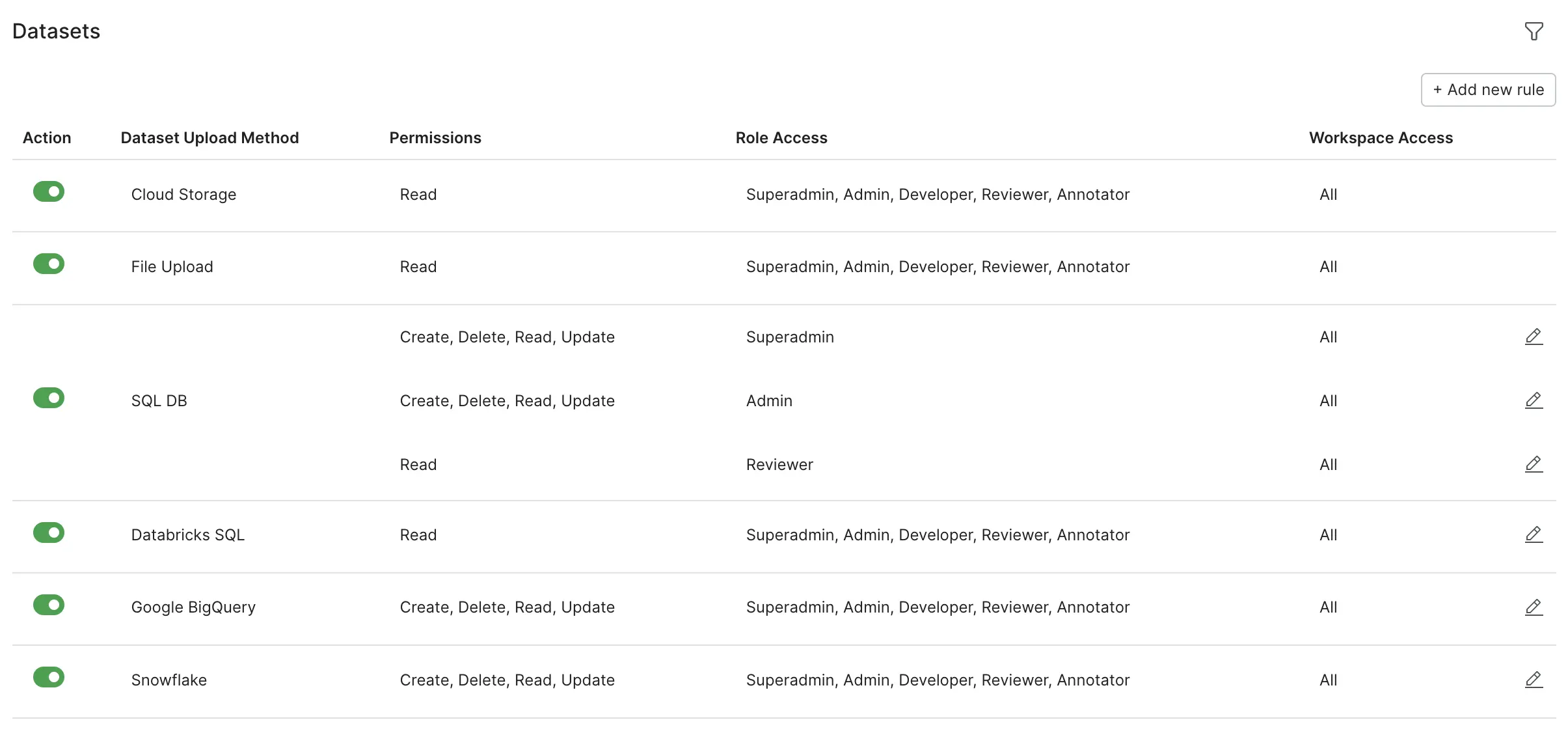

Default rules

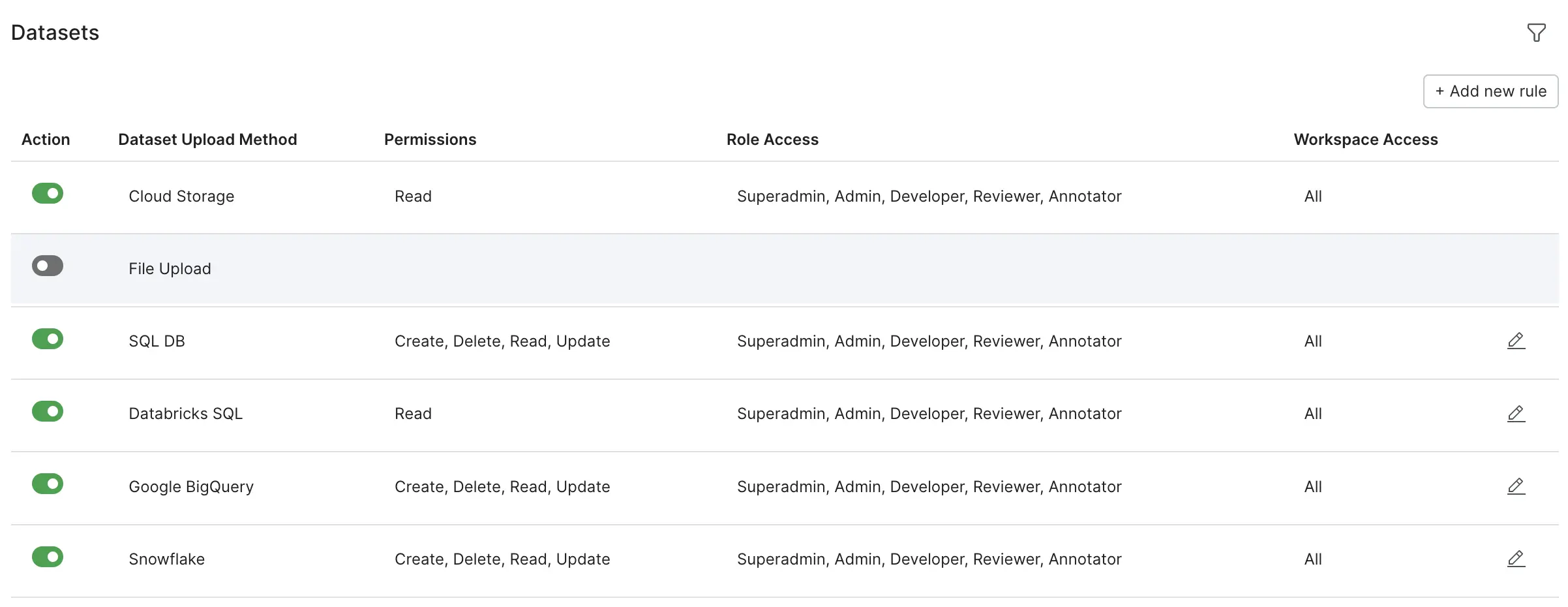

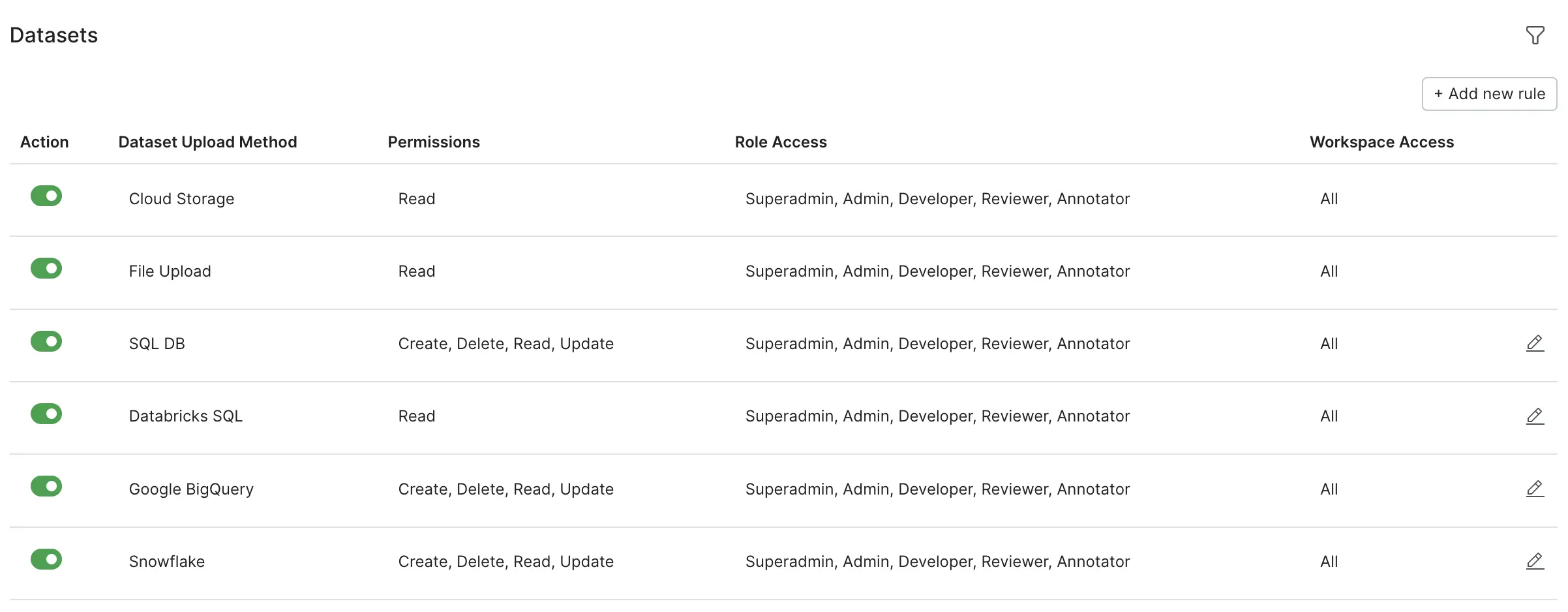

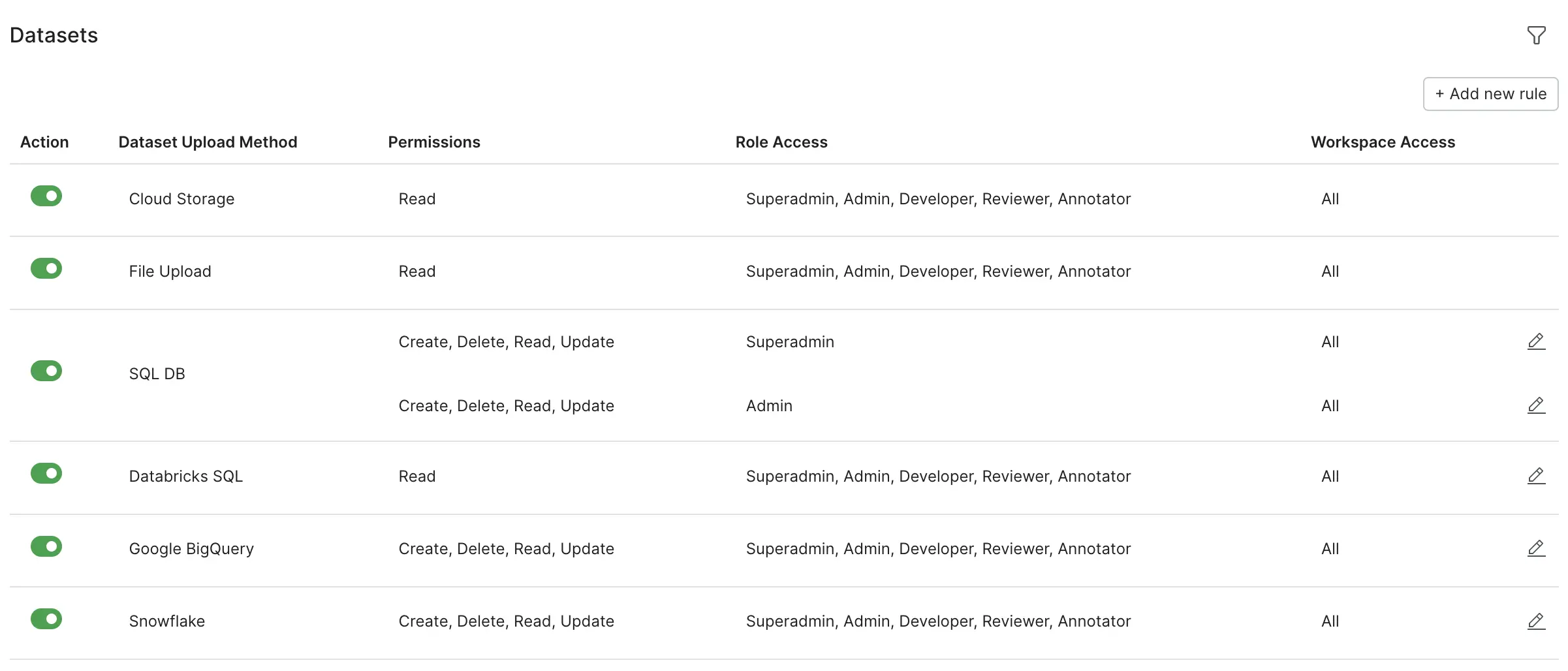

By default, Snorkel Flow grants all roles in all workspaces full access to each dataset upload method:

- Cloud Storage

- File Upload

- SQL DB

- Databricks SQL

- Google BigQuery

- Snowflake

The available permissions depend on the dataset upload method:

- For Cloud Storage and File Upload, the only available permission is Read.

- For SQL DB, Databricks SQL, Google BigQuery, and Snowflake, the permissions are Create, Delete, Read, and Update.
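The default rules can be summarized as a small data model. The following Python sketch is a hypothetical toy model, not Snorkel Flow's implementation or API. The connector and permission names come from the lists above, and the role names come from the roles mentioned on this page. `Rule`, `default_rule`, and the `"*"` all-workspaces wildcard are illustrative assumptions. The sketch builds one default rule per connector, and each rule grants every available permission to every role in every workspace.

```python
from dataclasses import dataclass

# Permissions available per dataset upload method, as listed on this page.
READ_ONLY = frozenset({"Read"})
CRUD = frozenset({"Create", "Delete", "Read", "Update"})

AVAILABLE_PERMISSIONS = {
    "Cloud Storage": READ_ONLY,
    "File Upload": READ_ONLY,
    "SQL DB": CRUD,
    "Databricks SQL": CRUD,
    "Google BigQuery": CRUD,
    "Snowflake": CRUD,
}

# Roles mentioned on this page (toy model only).
ROLES = frozenset({"Superadmin", "Admin", "Developer", "Annotator", "Reviewer"})

# Hypothetical wildcard meaning "every workspace".
ALL_WORKSPACES = "*"


@dataclass(frozen=True)
class Rule:
    """Hypothetical access rule: who may do what with a connector, and where."""
    connector: str
    roles: frozenset
    workspace: str
    permissions: frozenset


def default_rule(connector: str) -> Rule:
    """Full access: every available permission, for all roles in all workspaces."""
    return Rule(
        connector=connector,
        roles=ROLES,
        workspace=ALL_WORKSPACES,
        permissions=AVAILABLE_PERMISSIONS[connector],
    )


defaults = [default_rule(name) for name in AVAILABLE_PERMISSIONS]
for rule in defaults:
    print(rule.connector, sorted(rule.permissions))
```

Because Cloud Storage and File Upload expose only Read, their default rules carry only that permission.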

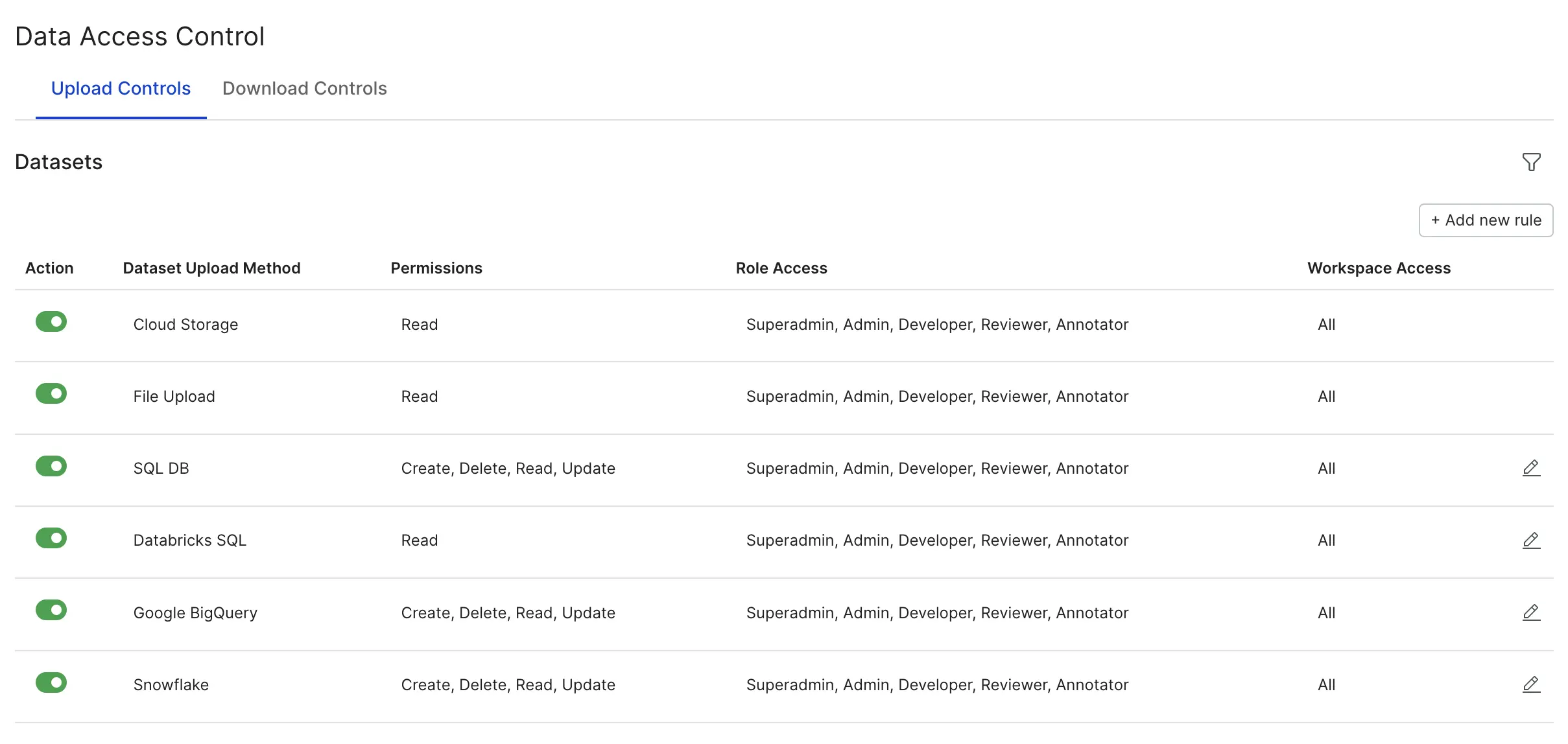

Access the dataset access control page

Follow these steps to access the Dataset Upload - Access Control page:

- Click your user name in the bottom-left corner of your screen.

- Click Admin settings.

- Click Data under Access Controls.

Manage data connectors

This section walks through how you can:

- Disable dataset upload methods across your Snorkel Flow instance

- Enable dataset upload methods across your Snorkel Flow instance

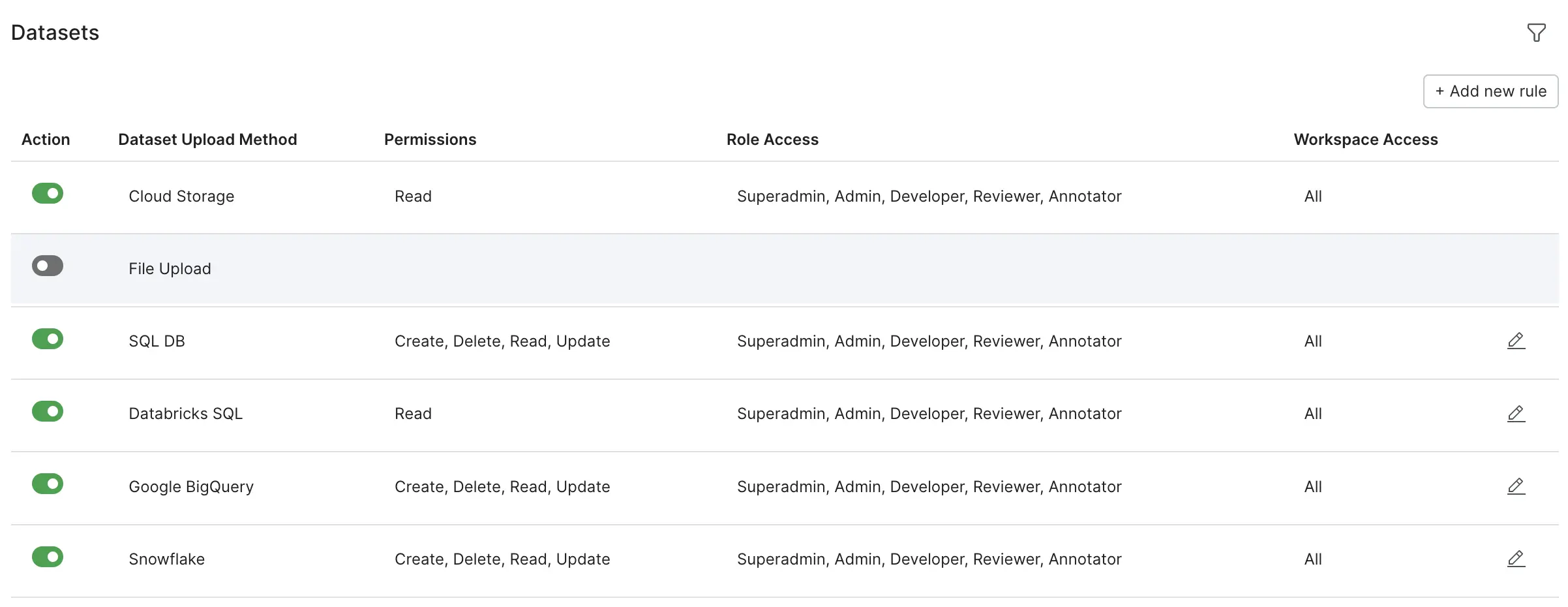

Disable a dataset upload method for the entire Snorkel Flow instance

Let's say that we want to disallow local data upload for the platform. Follow these steps to disable the File Upload dataset upload method:

- Navigate to the Dataset Upload - Access Control page: click your user name in the bottom-left corner of your screen, click Admin settings, then click Data.

- Click the toggle in the Action column for File Upload. This brings up a confirmation dialog.

- Click Disable to disable the specified data connector (in this example, File Upload).

The change is reflected during dataset creation: as shown below, the File Upload option is grayed out.

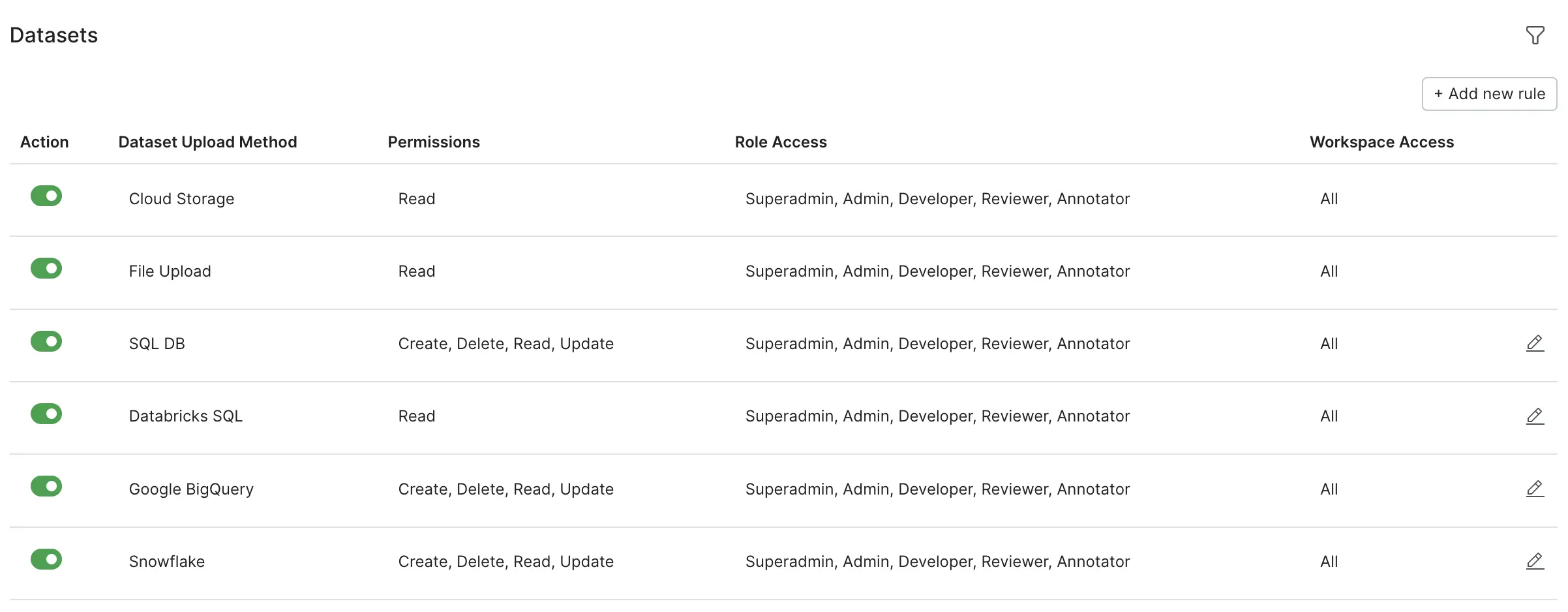

Disabling a data connector deletes all existing rules for that connector. When you re-enable the dataset upload method, the default rule is restored (i.e., full permissions for all roles in all workspaces).
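The disable and enable behavior described above can be sketched as a state change on a single connector. This is a hypothetical toy model: the `ConnectorState` class and its methods are illustrative and are not part of Snorkel Flow. Disabling clears every rule for the connector. Re-enabling restores only the default rule, so any custom rules have to be recreated.

```python
from dataclasses import dataclass, field


@dataclass
class ConnectorState:
    """Hypothetical toy model of one data connector's instance-wide state."""
    name: str
    enabled: bool = True
    rules: list = field(default_factory=list)

    def default_rule(self) -> str:
        # Stand-in for the pre-populated rule: full permissions for all
        # roles in all workspaces.
        return f"{self.name}: full permissions, all roles, all workspaces"

    def disable(self) -> None:
        # Disabling deletes every existing rule for this connector.
        self.enabled = False
        self.rules.clear()

    def enable(self) -> None:
        # Re-enabling restores only the default rule; custom rules are gone.
        self.enabled = True
        self.rules = [self.default_rule()]


file_upload = ConnectorState("File Upload", rules=["custom rule A", "custom rule B"])
file_upload.disable()
print(file_upload.enabled, file_upload.rules)  # False []
file_upload.enable()
print(file_upload.rules)  # ['File Upload: full permissions, all roles, all workspaces']
```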

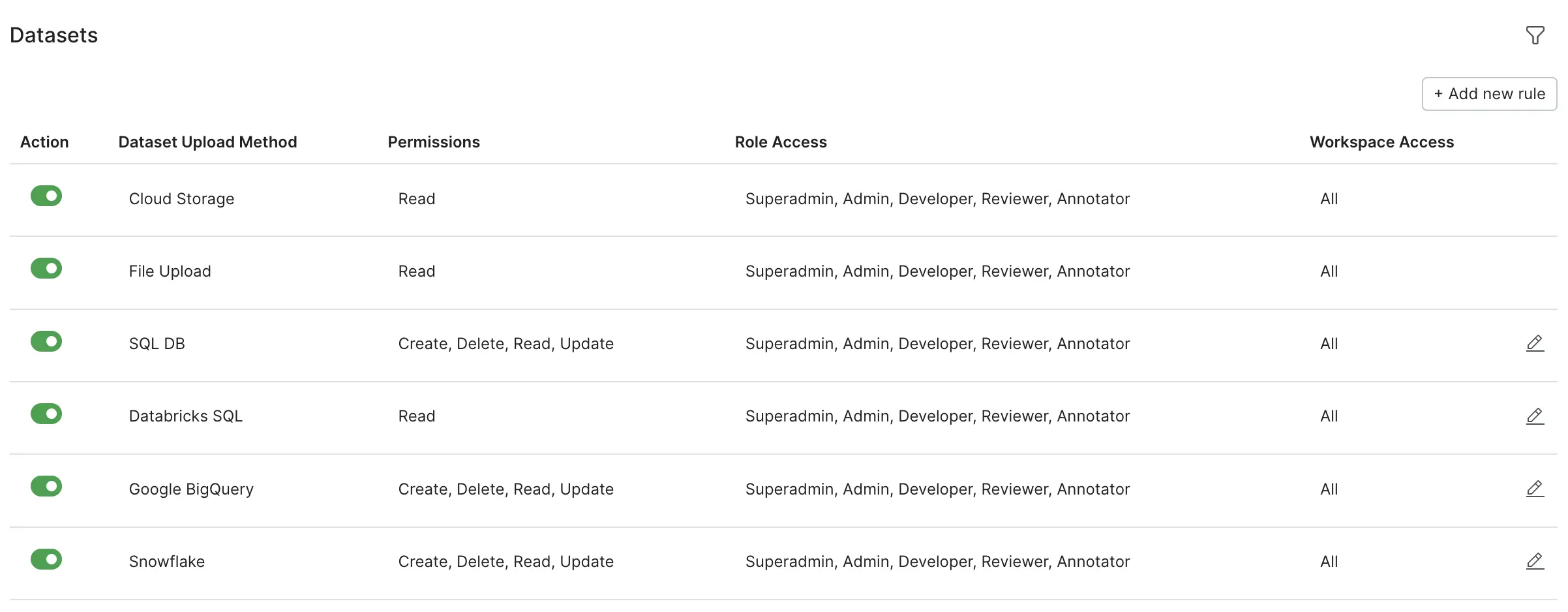

Enable a dataset upload method for the entire Snorkel Flow instance

Let's say that we've disabled the File Upload dataset upload method, but now want to re-enable it. Follow these steps to enable local file upload:

- Navigate to the Dataset Upload - Access Control page: click your user name in the bottom-left corner of your screen, click Admin settings, then click Data.

- Click the toggle in the Action column for File Upload.

The change is reflected during dataset creation: the File Upload option is no longer grayed out.

Share data connector credentials securely in-platform

This section walks through how you can:

- Create a data connector configuration.

- Ingest a data source with an existing configuration.

Create a data connector configuration

Follow these steps to create a data connector configuration:

-

In the left-side menu, click Datasets.

-

In the top left corner of the Datasets page, click + Upload new dataset. Currently, we support storing connector credentials for the following connectors:

- SQL DB

- Databricks SQL

- Google BigQuery

- Snowflake

-

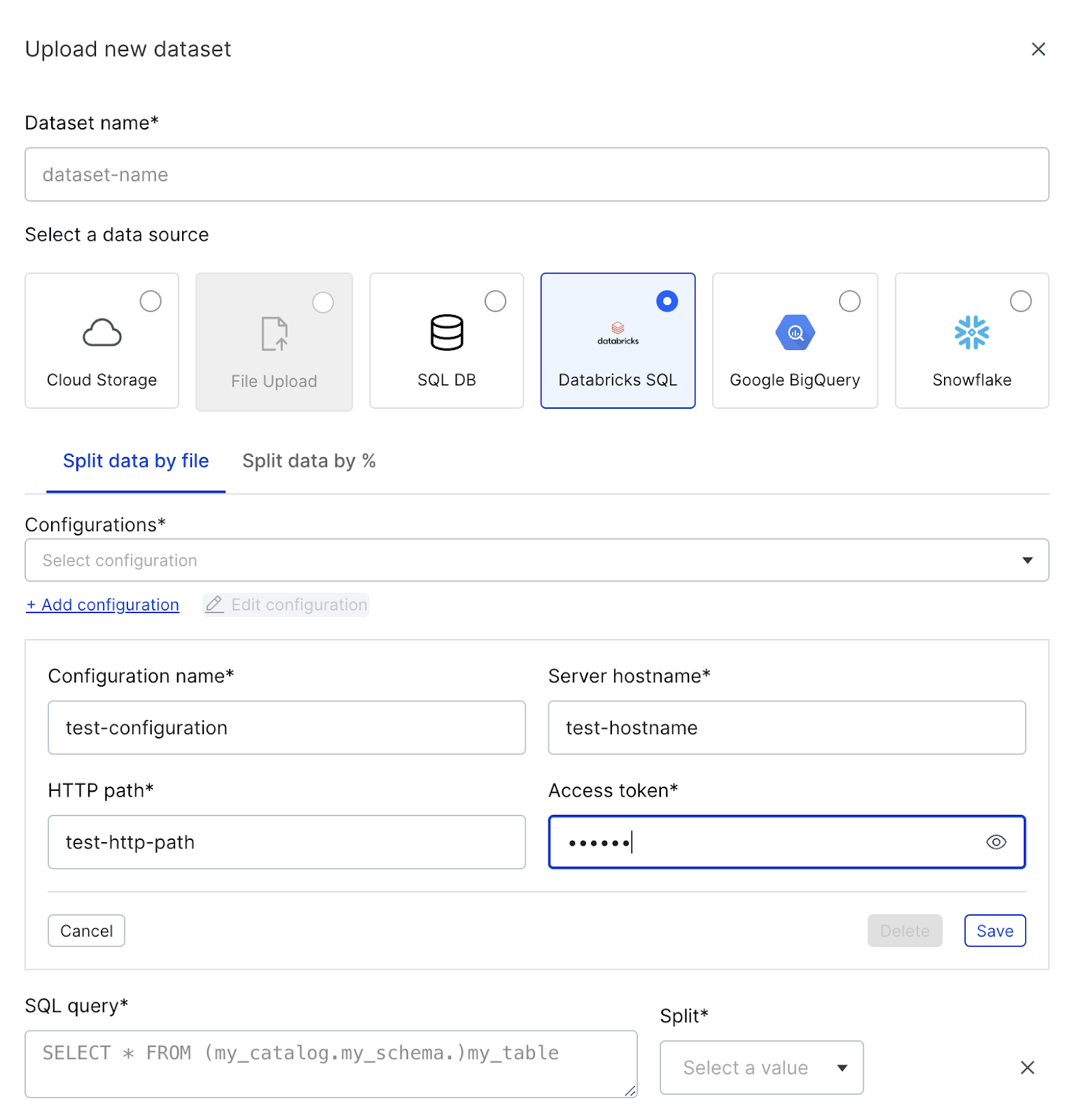

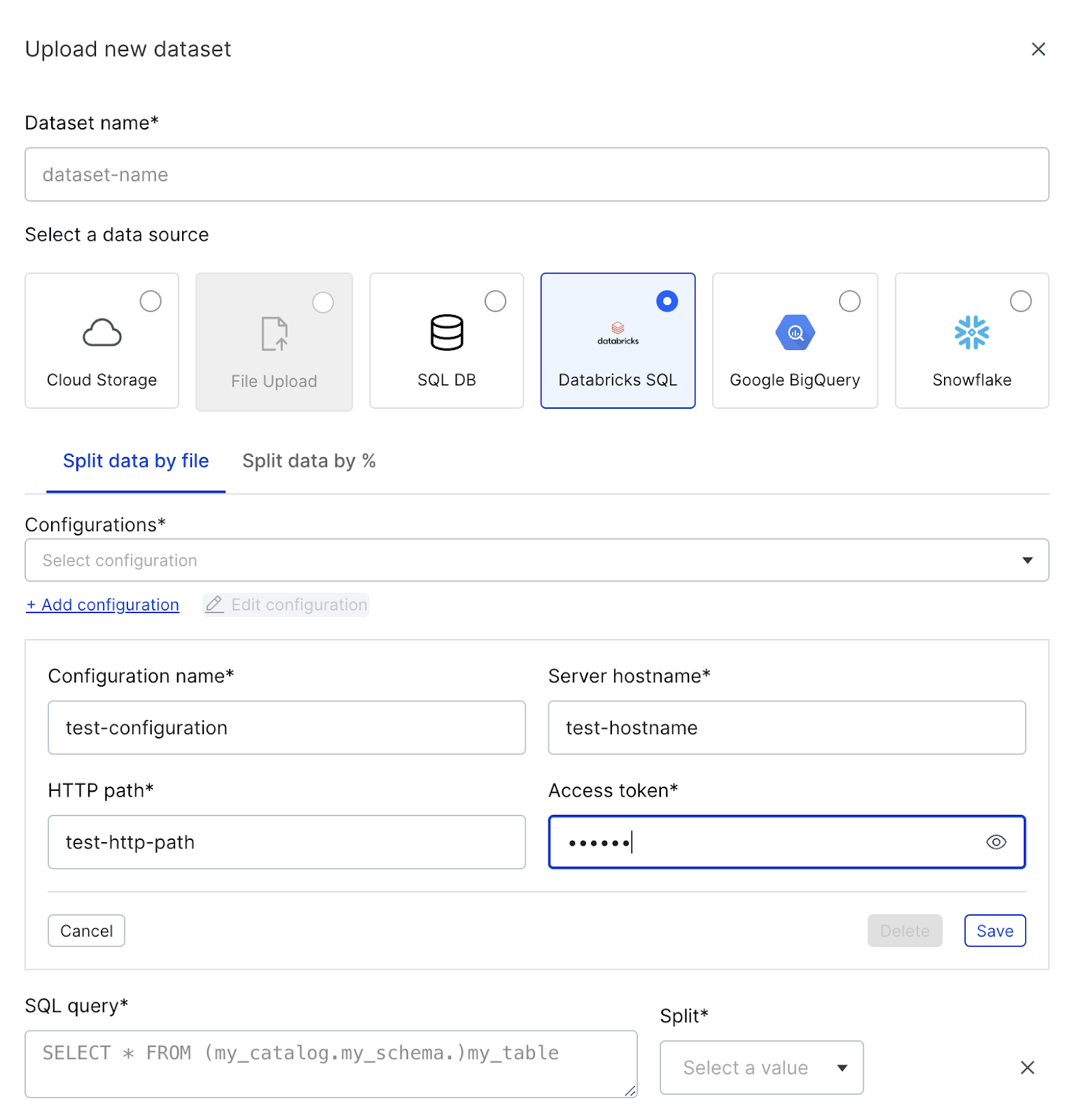

Pick one of the dataset upload methods, then click + Add configuration. You will see configuration fields pop up on your screen. Different connector configs will have different fields that are relevant for the connector. The example below shows the configuration options for the Databricks SQL connector.

-

Fill out the configuration fields, then click Save.

-

Once saved, the configuration will be available for everyone with Read access to configs in that particular workspace.

Users with Create, Delete, Read, and Update permissions can modify the configuration, but they can never retrieve its secret fields (e.g., the Access token field for Databricks SQL).
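The credential-sharing guarantee, where secrets can be changed but never read back, can be modeled as a write-only field. The sketch below is a hypothetical illustration and does not describe how Snorkel Flow actually stores credentials. `ConnectorConfig`, the `Server hostname` field, and the placeholder values are assumptions. Only the Access token field name comes from this page.

```python
from dataclasses import dataclass


@dataclass
class ConnectorConfig:
    """Hypothetical toy model of a saved connector configuration.

    Secret fields can be overwritten, but are always masked when the
    configuration is read back.
    """
    connector: str
    fields: dict
    secret_fields: frozenset = frozenset()

    def update(self, changes: dict) -> None:
        # Users with Update permission may overwrite any field, secrets included.
        self.fields.update(changes)

    def view(self) -> dict:
        # Reads always mask secret values, whatever the caller's permissions.
        return {
            key: ("********" if key in self.secret_fields else value)
            for key, value in self.fields.items()
        }


config = ConnectorConfig(
    connector="Databricks SQL",
    # Placeholder values for illustration only.
    fields={"Server hostname": "example.cloud.databricks.com", "Access token": "dapi-secret"},
    secret_fields=frozenset({"Access token"}),
)
config.update({"Access token": "dapi-rotated"})  # rotating the secret is allowed
print(config.view())
# {'Server hostname': 'example.cloud.databricks.com', 'Access token': '********'}
```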

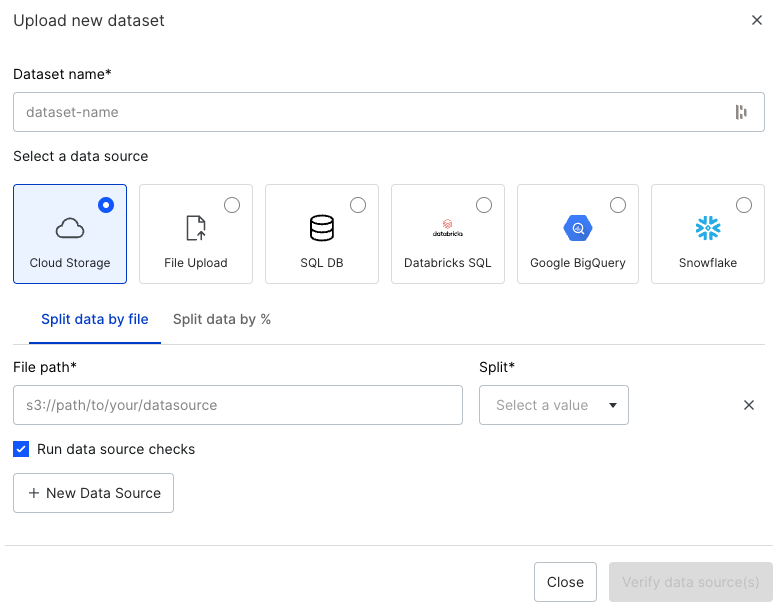

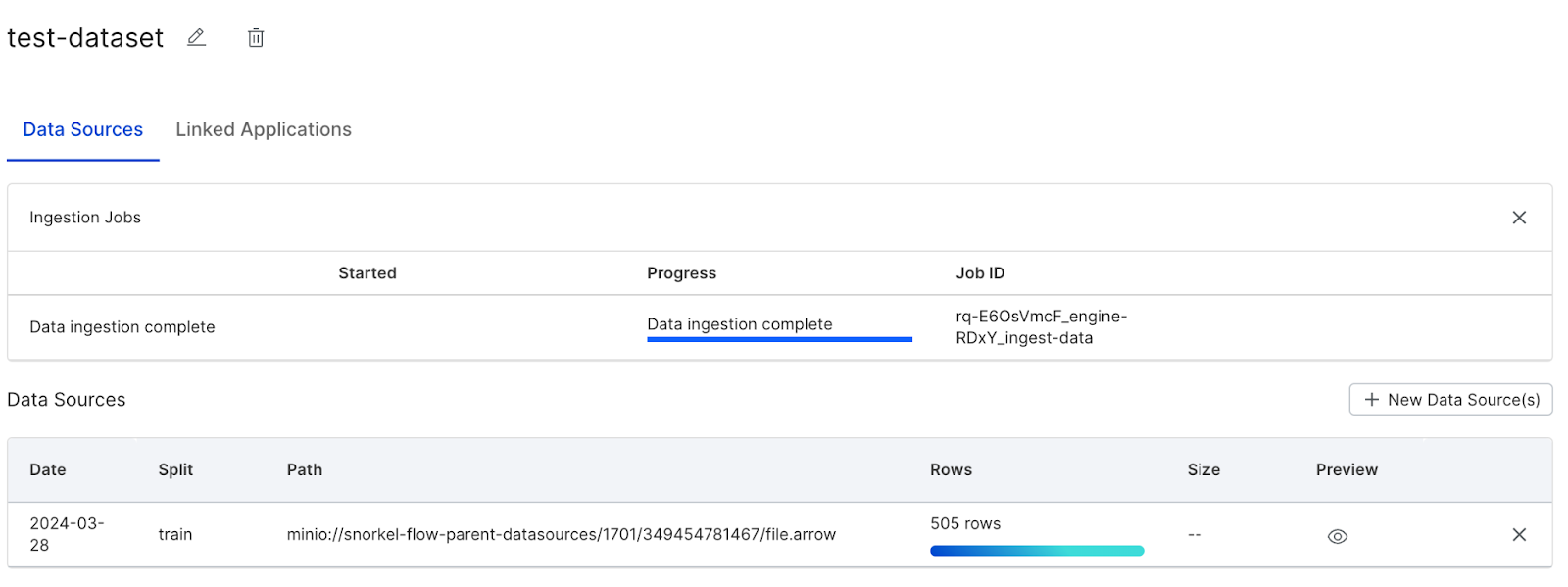

Ingest a data source with an existing configuration

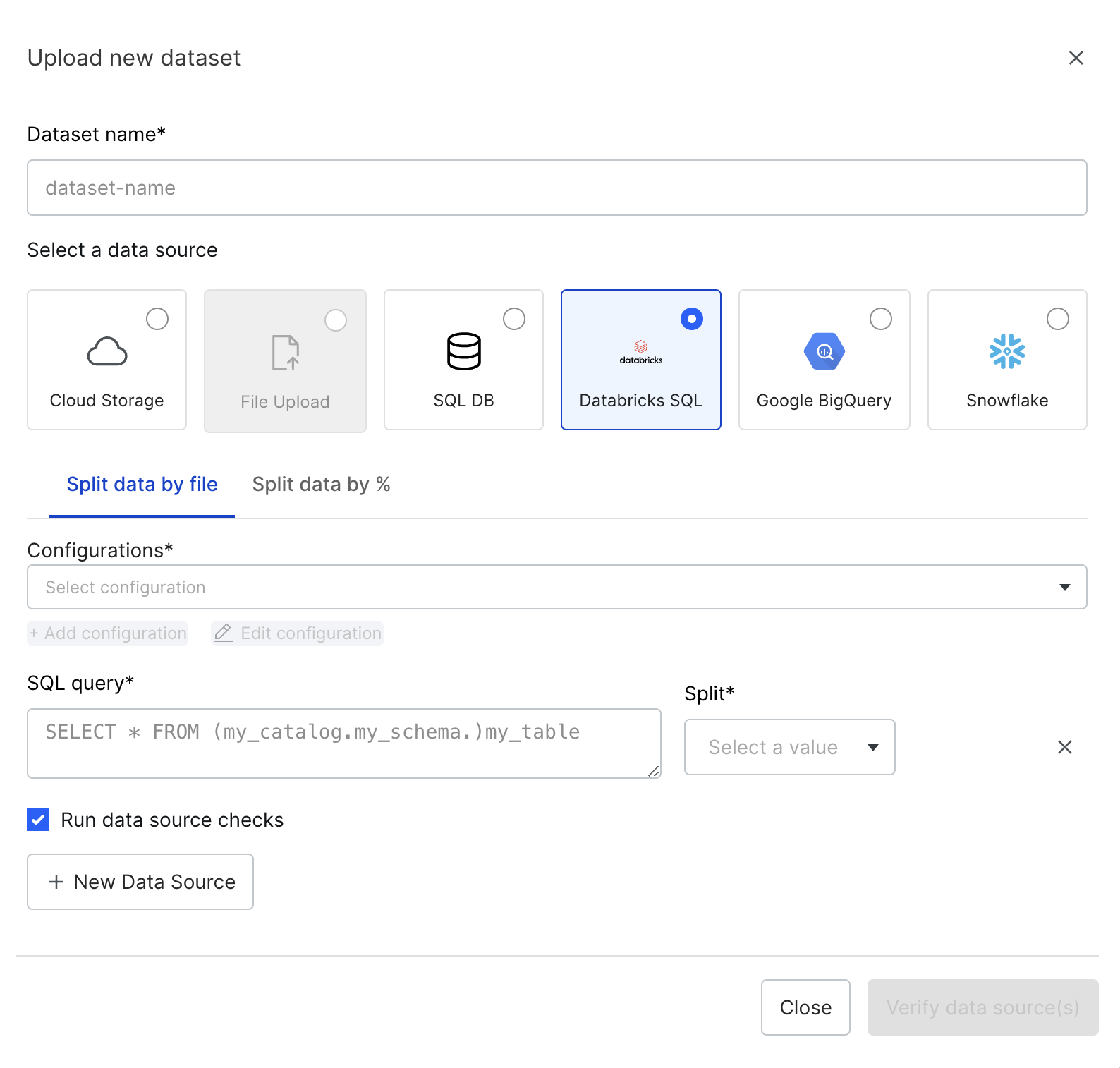

Users with only Read access cannot create a new configuration, but they can ingest data using any existing configuration. For example, let's say that we are a user who wants to use an existing Databricks SQL configuration.

- Click Datasets in the left-side menu to open the Datasets page.

- Click + Upload new dataset to begin dataset creation.

- Under Select a data source, click Databricks SQL.

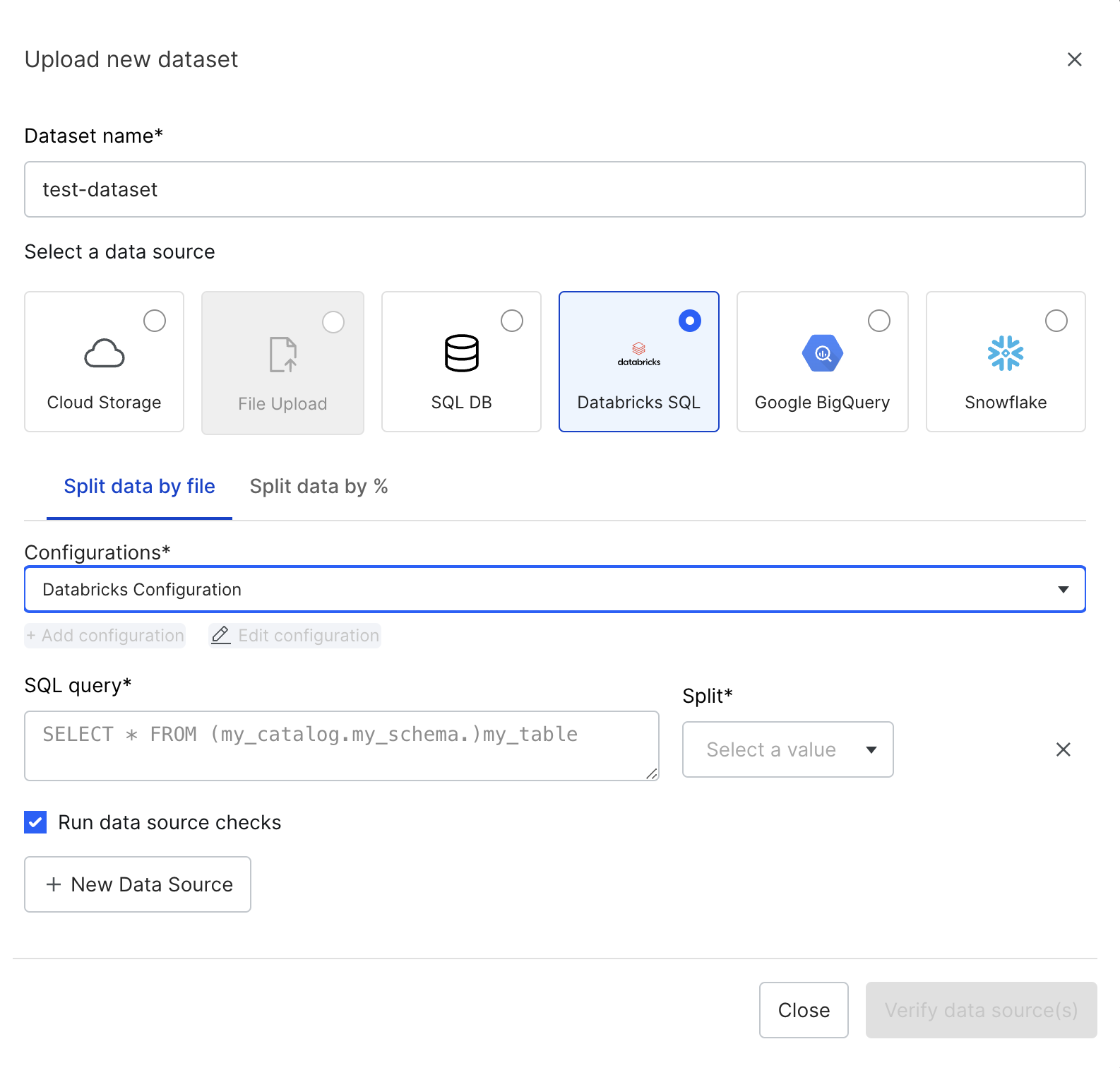

- Give the dataset a name (in this example, test-dataset).

- Select Databricks Configuration from the Configurations drop-down, which lists all configurations available to you in the workspace.

- Notice that the Edit configuration button is grayed out because you don't have permission to view or edit the configuration.

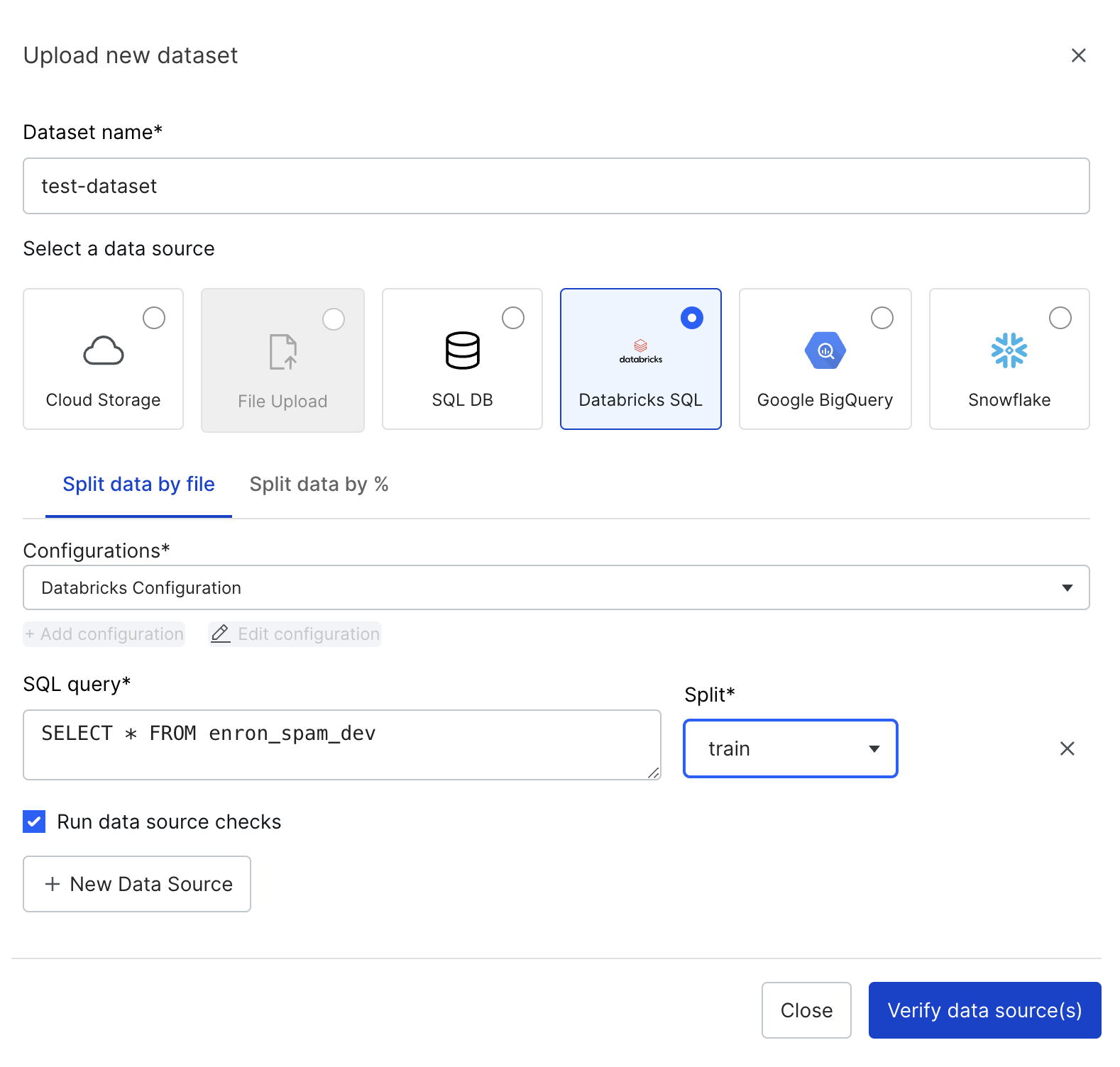

- Finish the standard flow for data ingestion (e.g., enter a SQL Query and specify a data Split), then click Verify data source(s).

Notice that the data has been successfully ingested from Databricks, without the need to provide server details or access credentials.

Fine-grained data connector rules for different roles

This section walks through how you can:

- Create or update rules.

- Delete rules.

Create or update rules

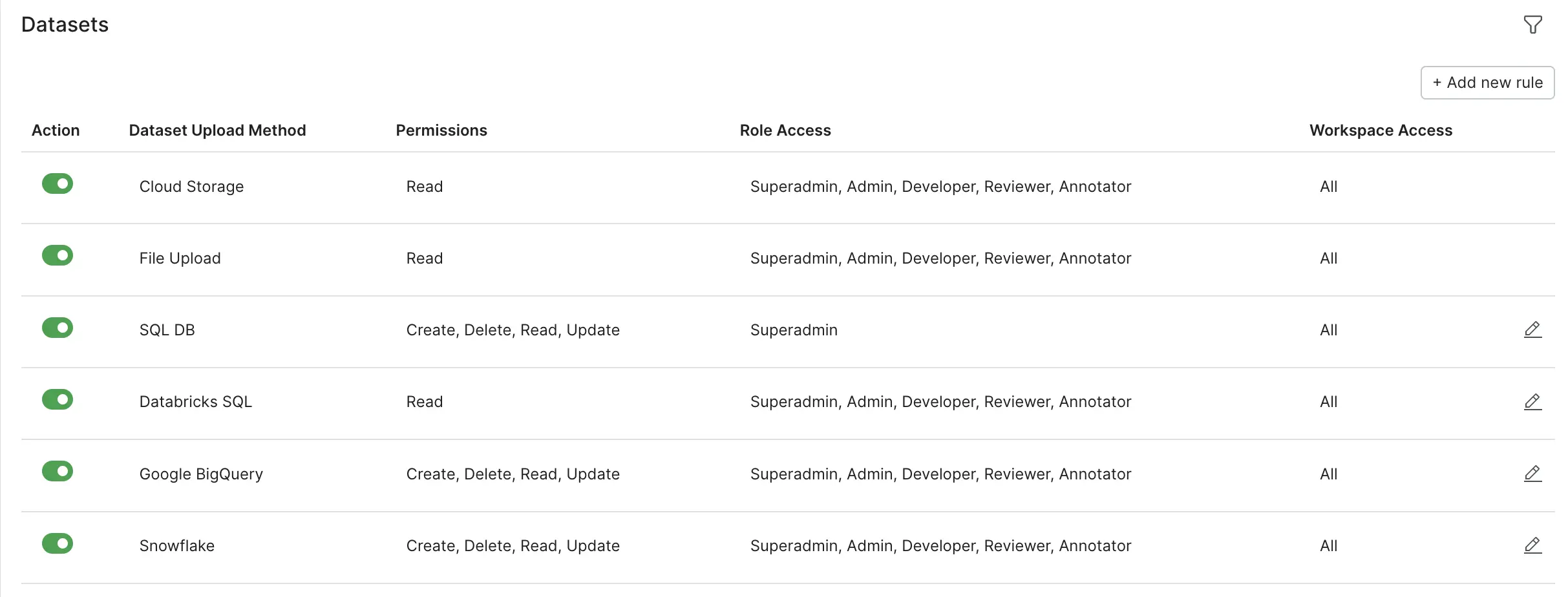

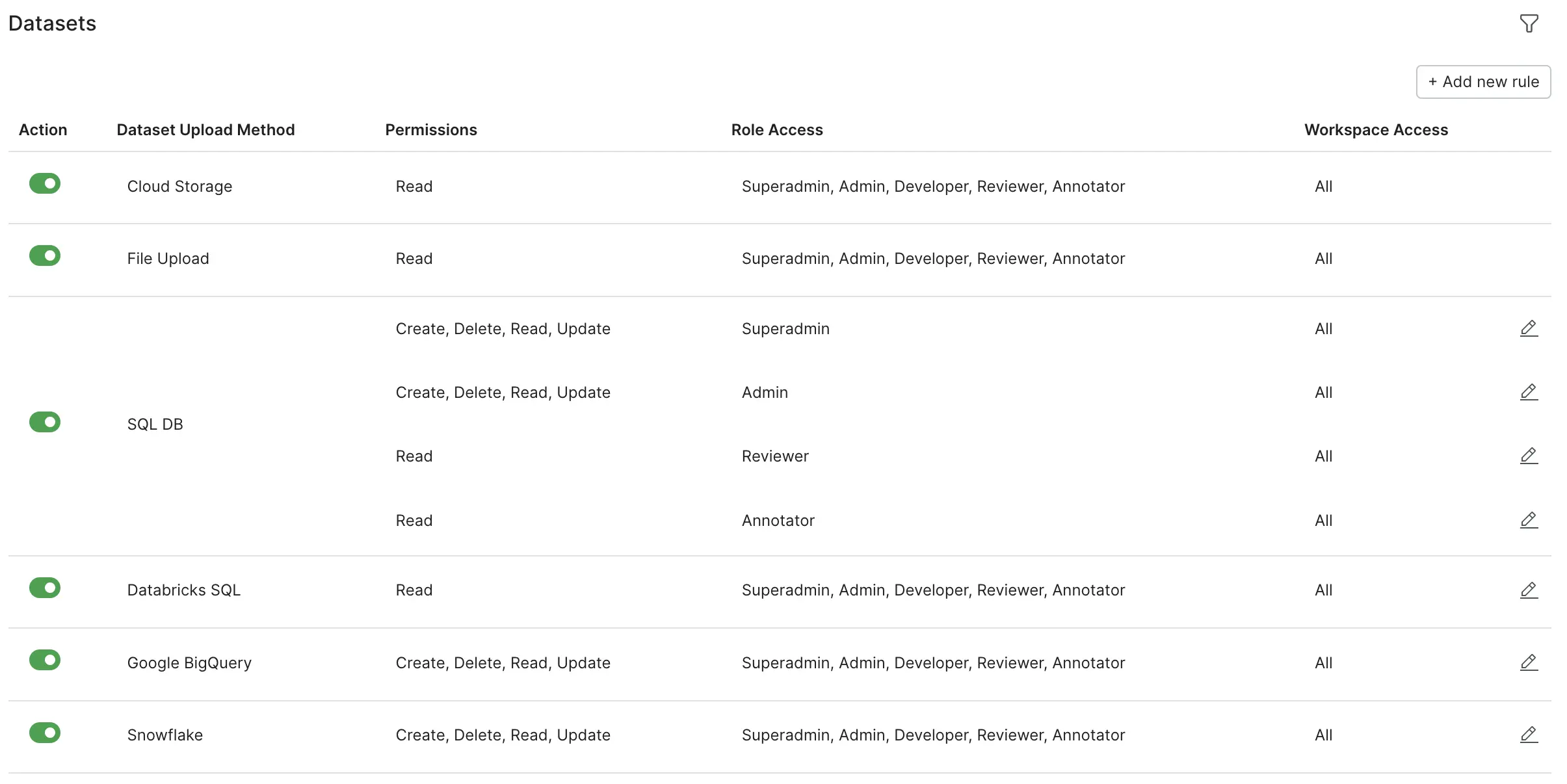

There may be situations in which you'll want to assign different levels of permissions to different roles. For example, let's say that superadmins and admins should be the only ones able to create, delete, and update configurations for SQL DB. Annotators and reviewers should still be able to read and use the configurations for data ingestion, and developers should not be able to read or use the configurations. Follow these steps to create the necessary rules:

- Navigate to the Dataset Upload - Access Control page: click your user name in the bottom-left corner of your screen, click Admin settings, then click Data.

- In this example, we want to edit the initial, pre-populated rule for SQL DB. To do this, click the pencil icon for the SQL DB rule.

- Select Superadmin in the Role access drop-down.

- Click Save to save the rule. This automatically brings you back to the Dataset Upload - Access Control page.

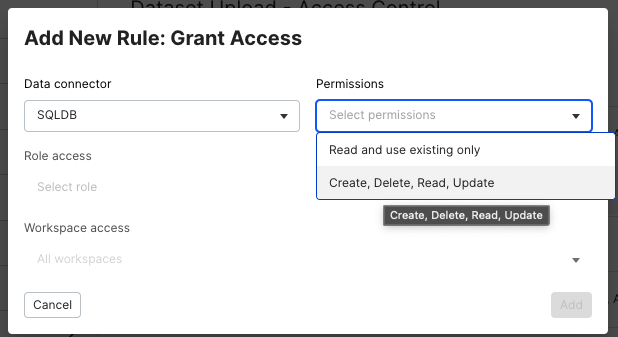

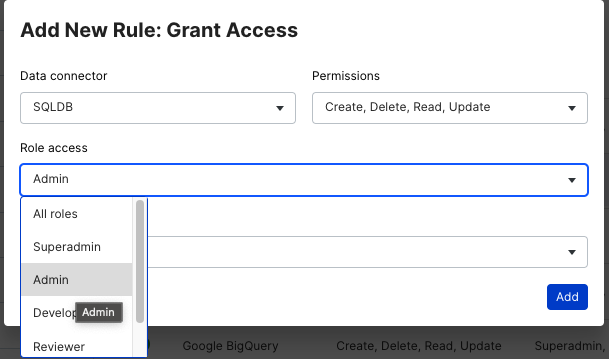

- Next, let’s create a new rule to allow create, read, update, and delete permissions for admins. Click + Add new rule in the top-right corner of the Dataset Upload - Access Control page. This will bring up a dialog to create a new access rule.

- Select SQL DB in the Data connector drop-down.

- Select Create, Delete, Read, Update in the Permissions drop-down.

- Select Admin in the Role access drop-down.

- Click Add to save the new rule. This automatically brings you back to the Dataset Upload - Access Control page.

Similarly, you can create rules that grant Read permission to annotators and reviewers. Developers need no rule: if there is no rule for the developer role in a particular workspace, developers have no access to the data connector, and the connector option is grayed out for them during dataset creation.
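The SQL DB rules built in this walkthrough can be checked with a small rule-evaluation sketch. It is a hypothetical toy model, not Snorkel Flow's access-control engine. `Rule`, `permissions_for`, the `"*"` all-workspaces wildcard, and the workspace name are illustrative. Two further assumptions are labeled here: every rule applies to all workspaces, and the permissions of all matching rules are combined. The sketch shows the behavior stated above: a role with no matching rule (here, Developer) gets an empty permission set.

```python
from dataclasses import dataclass

ALL_WORKSPACES = "*"  # hypothetical wildcard for "every workspace"

CRUD = frozenset({"Create", "Delete", "Read", "Update"})
READ = frozenset({"Read"})


@dataclass(frozen=True)
class Rule:
    """Hypothetical access rule: who may do what with a connector, and where."""
    connector: str
    roles: frozenset
    workspace: str
    permissions: frozenset


# The SQL DB rules from the walkthrough above (toy model).
rules = [
    Rule("SQL DB", frozenset({"Superadmin"}), ALL_WORKSPACES, CRUD),  # edited default rule
    Rule("SQL DB", frozenset({"Admin"}), ALL_WORKSPACES, CRUD),
    Rule("SQL DB", frozenset({"Annotator"}), ALL_WORKSPACES, READ),
    Rule("SQL DB", frozenset({"Reviewer"}), ALL_WORKSPACES, READ),
]


def permissions_for(rules, connector, role, workspace):
    """Combine the permissions of every rule matching connector, role, and workspace.

    No matching rule means no access (an empty set), which corresponds to the
    connector being grayed out for that role during dataset creation.
    """
    granted = set()
    for rule in rules:
        if (
            rule.connector == connector
            and role in rule.roles
            and rule.workspace in (workspace, ALL_WORKSPACES)
        ):
            granted |= rule.permissions
    return frozenset(granted)


for role in ("Superadmin", "Admin", "Annotator", "Reviewer", "Developer"):
    print(role, sorted(permissions_for(rules, "SQL DB", role, "example-workspace")))
# The last line printed is: Developer []
```

Deleting a rule, as described in the next section, corresponds to removing it from `rules`. For example, removing the Annotator rule leaves annotators with an empty permission set.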

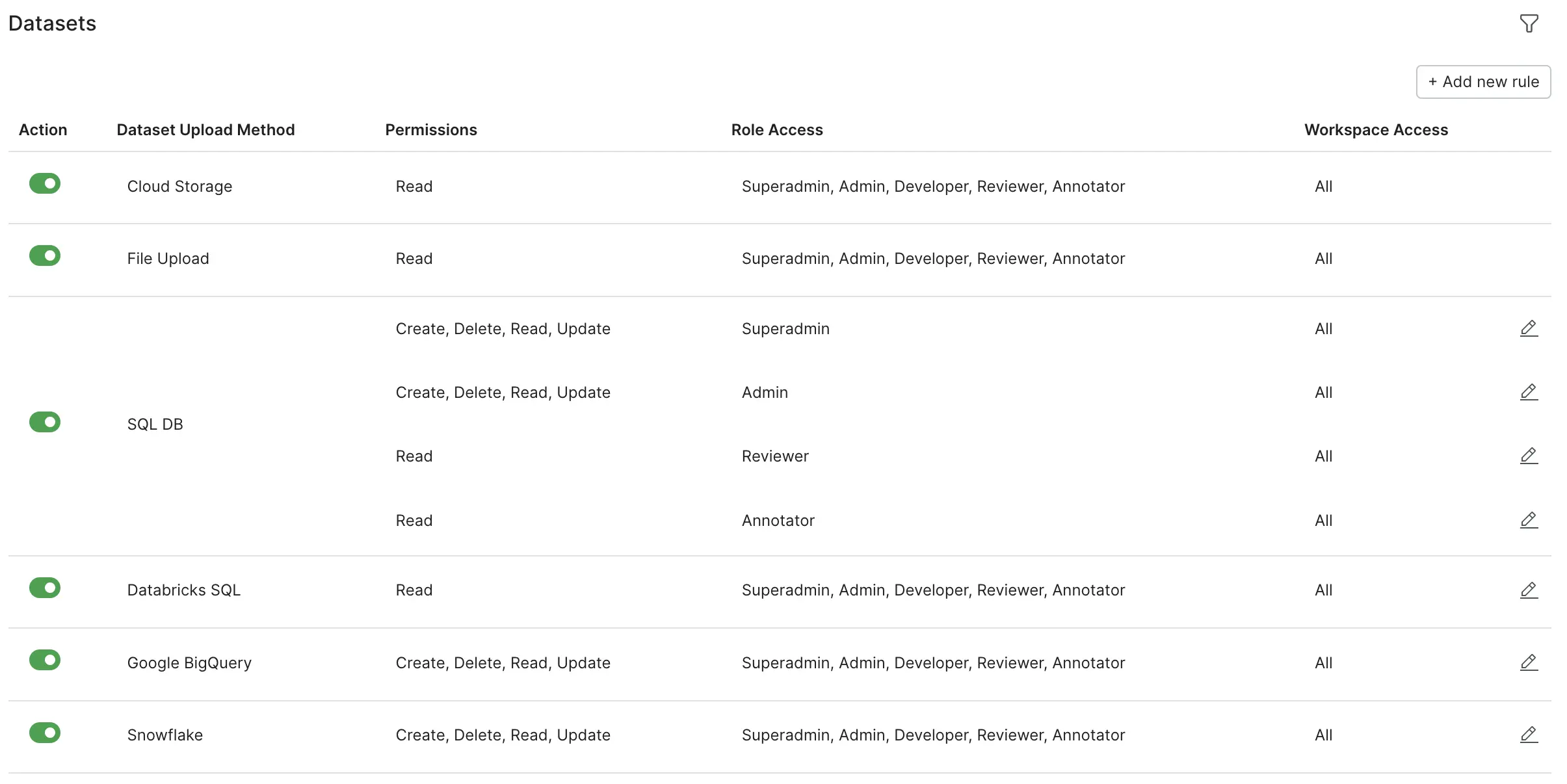

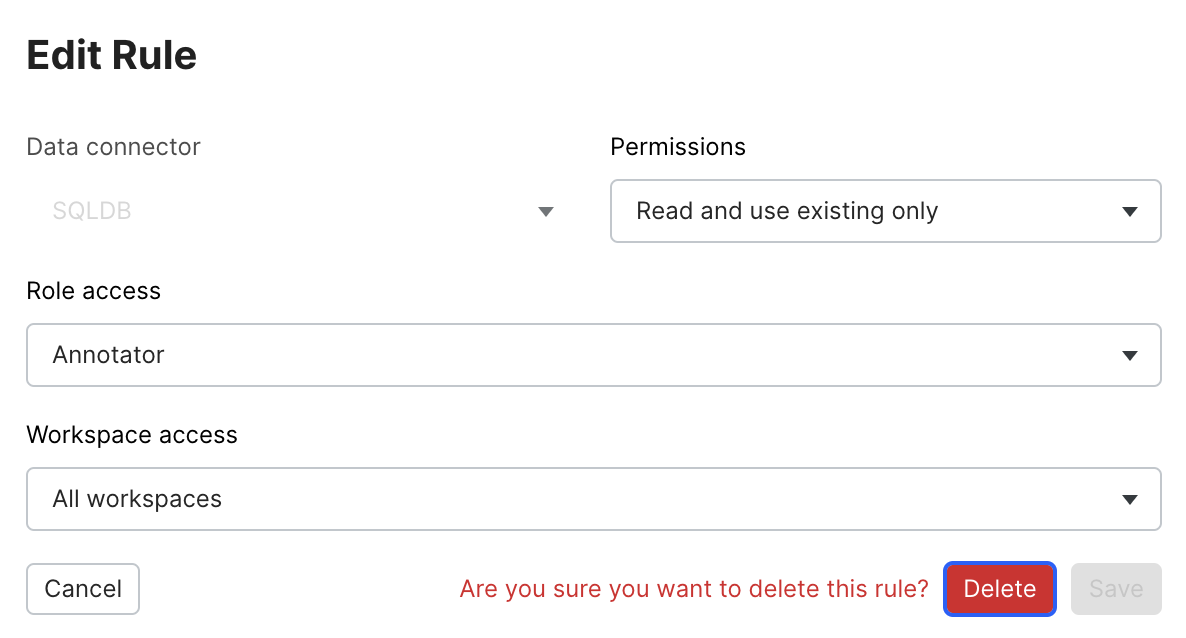

Delete rules

Let's say that we no longer want the rule that grants annotators Read access to SQL DB. Follow these steps to delete the rule:

- Navigate to the Dataset Upload - Access Control page: click your user name in the bottom-left corner of your screen, click Admin settings, then click Data.

- Click the pencil icon next to the rule you want to remove. In this example, select the SQL DB rule that grants the Annotator role Read permission.

- Click Delete. This will bring up a confirmation message.

- Click Delete one more time to delete the rule.