Prompt builder (SDK)

Prompt Builder offers the ability to precisely inject Foundation Model expertise into your application via an interactive workflow.

Typically, you will use the UI to see how a prompt is performing and create new labeling functions (LFs) from the prompt (learn more about the prompt builder here). However, prompt LFs can be previewed and created through the notebook as well.

Prompt builder supports the following application types: text classification (both single and multi-label), sequence tagging and PDF candidate-based extraction.

Previewing a prompt LF

To begin, import the third version of our SDK, as well as grab the model node for the specific application that you are working out of:

import snorkelflow.client_v3 as sf

ctx = sf.SnorkelFlowContext.from_kwargs() # Import context to our current application

APP_NAME = "APPLICATION_NAME" # Name of your application

NODE_UID = sf.get_model_node(APP_NAME) # Model node used in the application

You can now preview a prompt LF:

sf.fm_suite.preview_prompt_lf(

NODE_UID,

model_name="google/flan-t5-xxl",

split="dev",

num_examples=5,

model_type="text2text_multi_label",

prompt_text="""The possible labels are anova, bayesian, mathematical-statistics, R.

What is the most likely label for the following document: {Body_q} {Body_a}\n\nLabel: """,

)

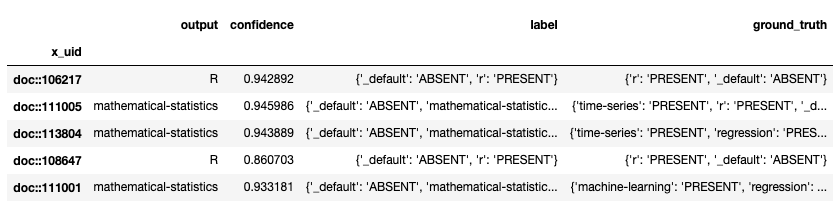

This will show the result of running that prompt LF using 5 examples in the dev split:

- output: the output of the LLM used, in this case google/flan-t5-xxl.

- label: the in-Snorkel label that is assigned by the code mapper based on the LLM output. A default code mapper is provided for each supported application type (basic keyword mapping), but a custom one can be passed using the output_code parameter

- ground truth: the actual label.

To get more information about each of the parameters, run:

sf.fm_suite.preview_prompt_lf?

Creating a prompt LF

Once you're satisfied with the preview of the prompt LF, you can create it by calling create_prompt_lf. Here's how you can create the above LF:

sf.fm_suite.create_prompt_lf(

NODE_UID,

model_name="google/flan-t5-xxl",

lf_name="my awesome prompt LF",

model_type="text2text_multi_label",

prompt_text="""The possible labels are anova, bayesian, mathematical-statistics, R.

What is the most likely label for the following document: {Body_q} {Body_a}\n\nLabel: """,

)

Note that all the parameters stay the same, except that you have to provide an lf_name. You don't have to specify splits because the LF votes on all splits by default. As before, you can see all parameters available by running:

sf.fm_suite.create_prompt_lf?

If you go to Studio (in the left-side menu) for the same node, you should now be able to see the newly created LF under Labeling Functions (most likely under In Progress).