Prompt builder

Prompt builder offers the ability to precisely inject foundation model expertise into your application through an interactive workflow.

To open the prompt builder, click the Prompts icon and text within the LF composer toolbar as shown below. If you do not see the icon, ensure that the application type is supported. Application types that are currently supported include:

- Text classification (both single and multi-label)

- Sequence tagging

- PDF candidate-based extraction

As a user, you should feel empowered to try out any prompt engineering technique and observe how well it performs on your data. Once you are happy with the prompt, click Create prompt to capture this signal inside a Snorkel labeling function (LF). This enables you to go further by building with additional signal to surpass the original prompt scores.

Configuring available models

Prompt builder workflows rely on third party external services to run inference over your data. In order to configure these, you will need to use an account either from your organization, or request one from a Snorkel representative. Once an account has been established, you can configure the instance wide settings for the prompt builder according to the instructions in Using external models.

Prompting workflows

There are two different prompt builder workflows that you can experience depending on the task. These workflows are:

- Freeform

- Question answering

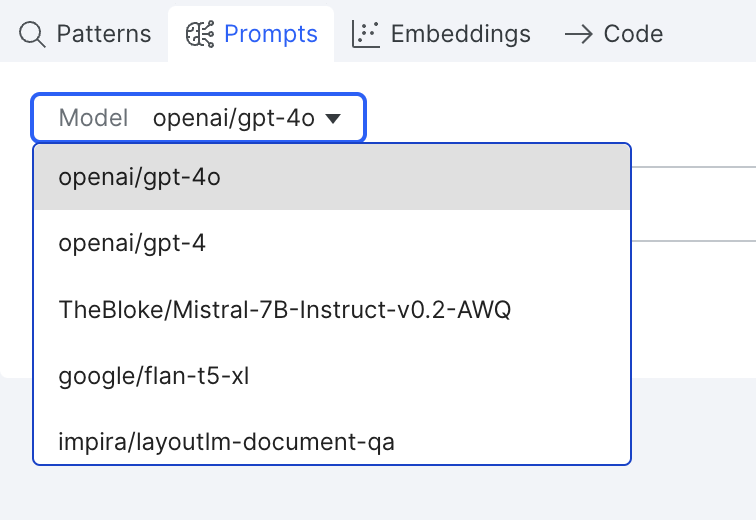

One common option across all workflows is the ability to select your chosen foundation model. There will be different models available for each workflow, but all models must be first configured as detailed in Using external models.

In all workflows, you should first start with a prompt to send to the model. Click Preview prompt, and then analyze the streaming results live as the model processes a sample of the current data split.

At any point, you can cancel the running job and progress will be saved. Clicking Resume will begin sending data to the model again for processing. Once you are satisfied with the LF performance on your current data split, click Create prompt to run the prompt over all of your active data sources.

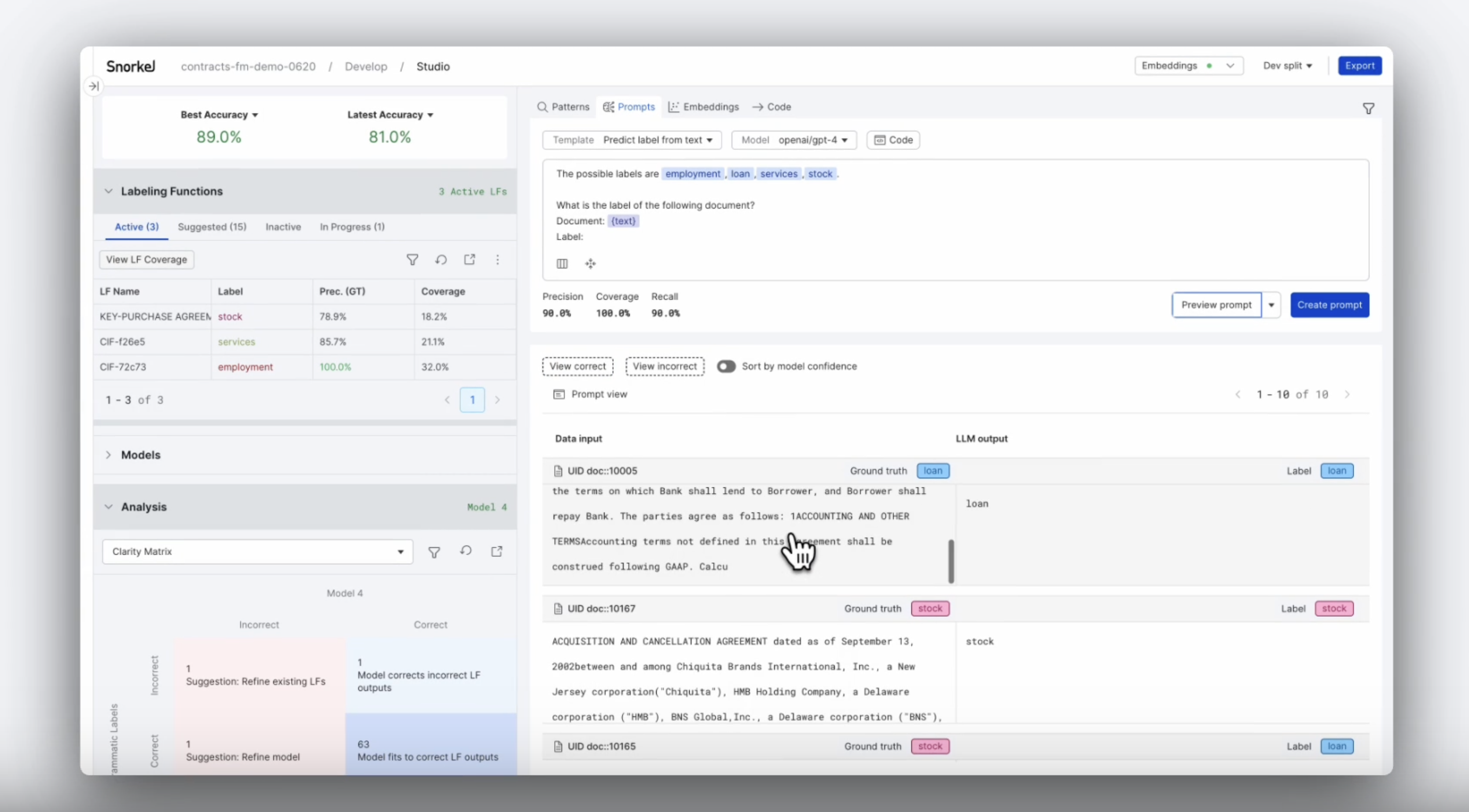

Freeform prompt workflow

The freeform workflow provides a flexible way to prompt engineer your data where any instruction can be passed to the foundation model. There are some template examples that are pre-populated for you. However, you should feel empowered to explore a variety of prompt engineering techniques.

In the workflow, you can fully specify both the prompt that you want to send to the model, including how the context of each example appears, along with the code to map the foundation model output to your classes.

In the screenshot above, "{text}" refers to the text field within your dataset. In each request to the model, any field name appearing between braces will be replaced with the value for that field in that example. This allows you to specify multiple fields in a single prompt to the model if necessary. In this example, we are asking openai/gpt-4 to label a document into one of four classes (employment, loan, services, or stock) based on the document text.

In the screenshot above, "{text}" refers to the text field within your dataset. In each request to the model, any field name appearing between braces will be replaced with the value for that field in that example. This allows you to specify multiple fields in a single prompt to the model if necessary. In this example, we are asking openai/gpt-4 to label a document into one of four classes (employment, loan, services, or stock) based on the document text.

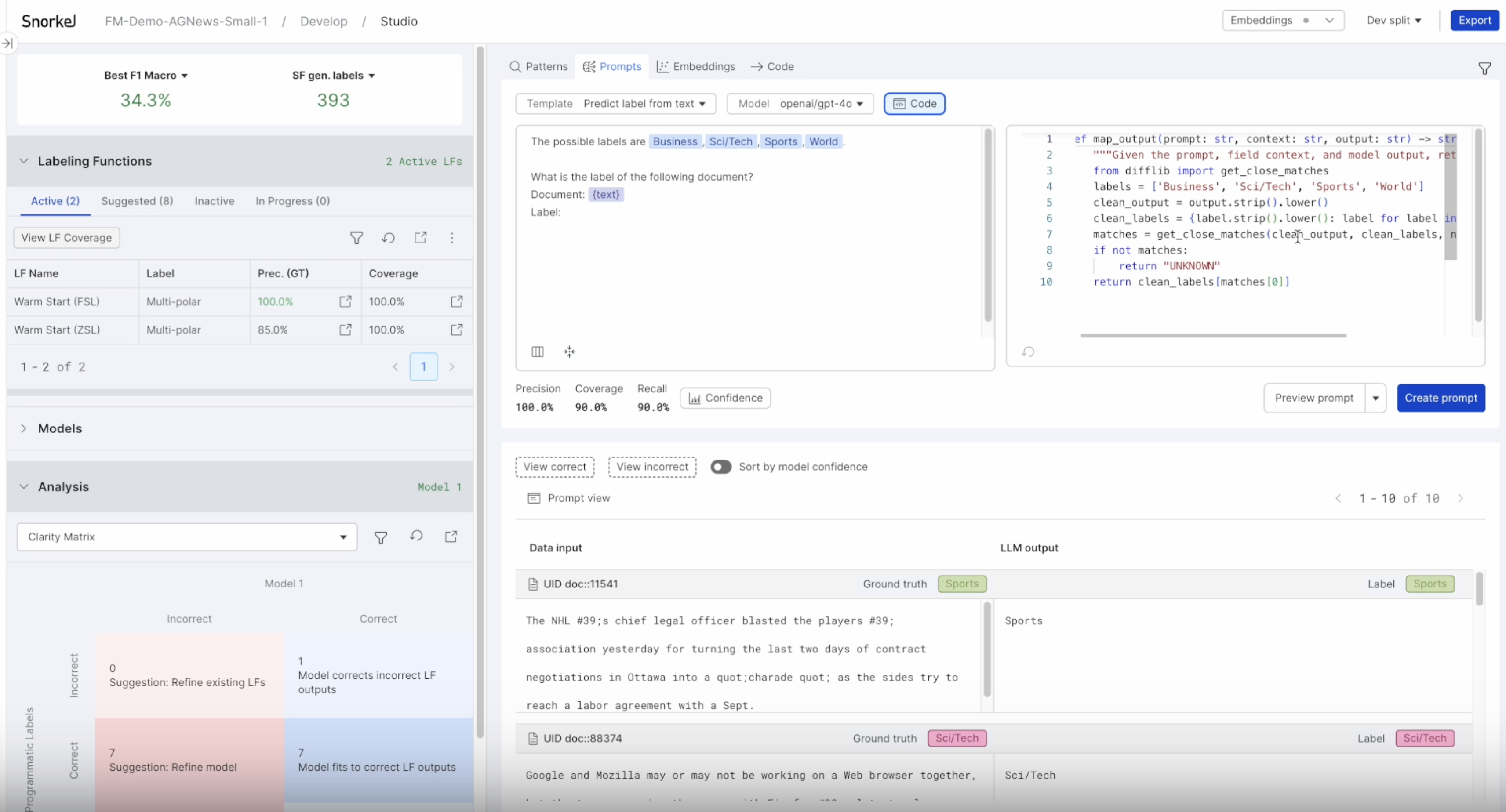

In addition, the freeform workflow allows you to customize the mapping from model output -> label. To do this click the Code icon. There are several templates with pre-defined prompts and code that you can edit to output to label mappings. Upon selecting any of these templates, you can modify each area as you see fit. This gives you significant low-level control over the input and output to the model.

The code that you write in the editor has a few requirements:

- All logic must be contained within the body of a single function.

- The single defined function must include "output" as its only parameter.

- The defined function should return the exact label name when voting for that label. Any other return value (including "None" and "UNKNOWN") will be treated as abstentions for the LF.

Once you make changes to the code, the refresh icon in the bottom left corner of the editor will become enabled. Clicking it will update your metrics and the Code mapped label column to reflect the latest code in the editor.

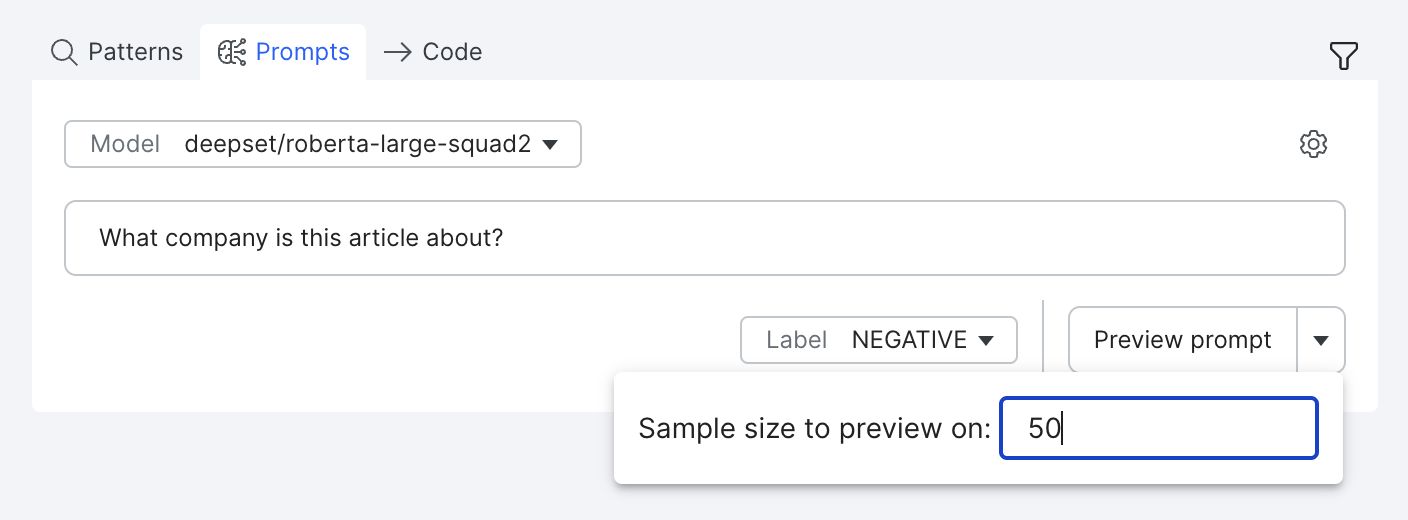

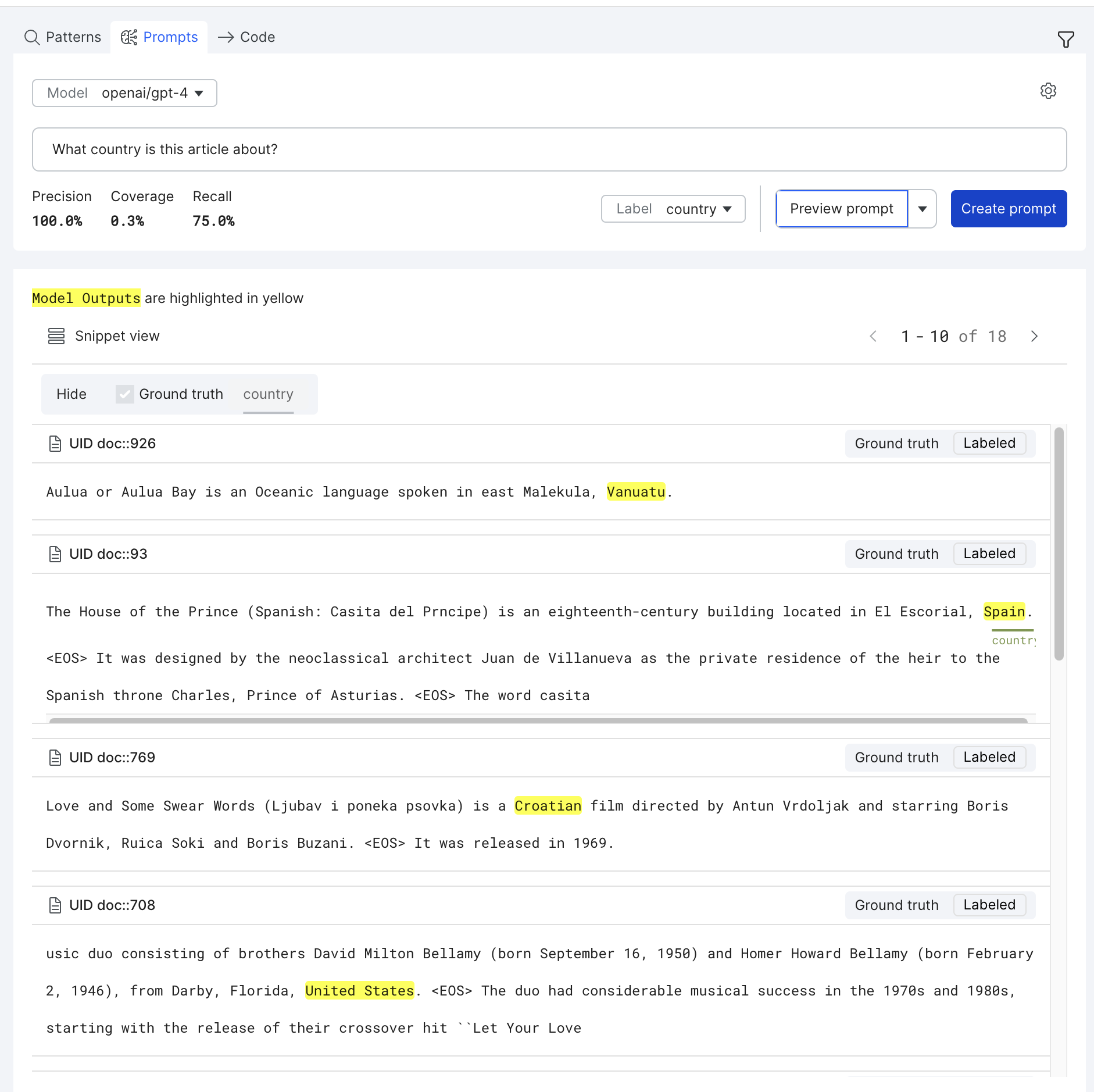

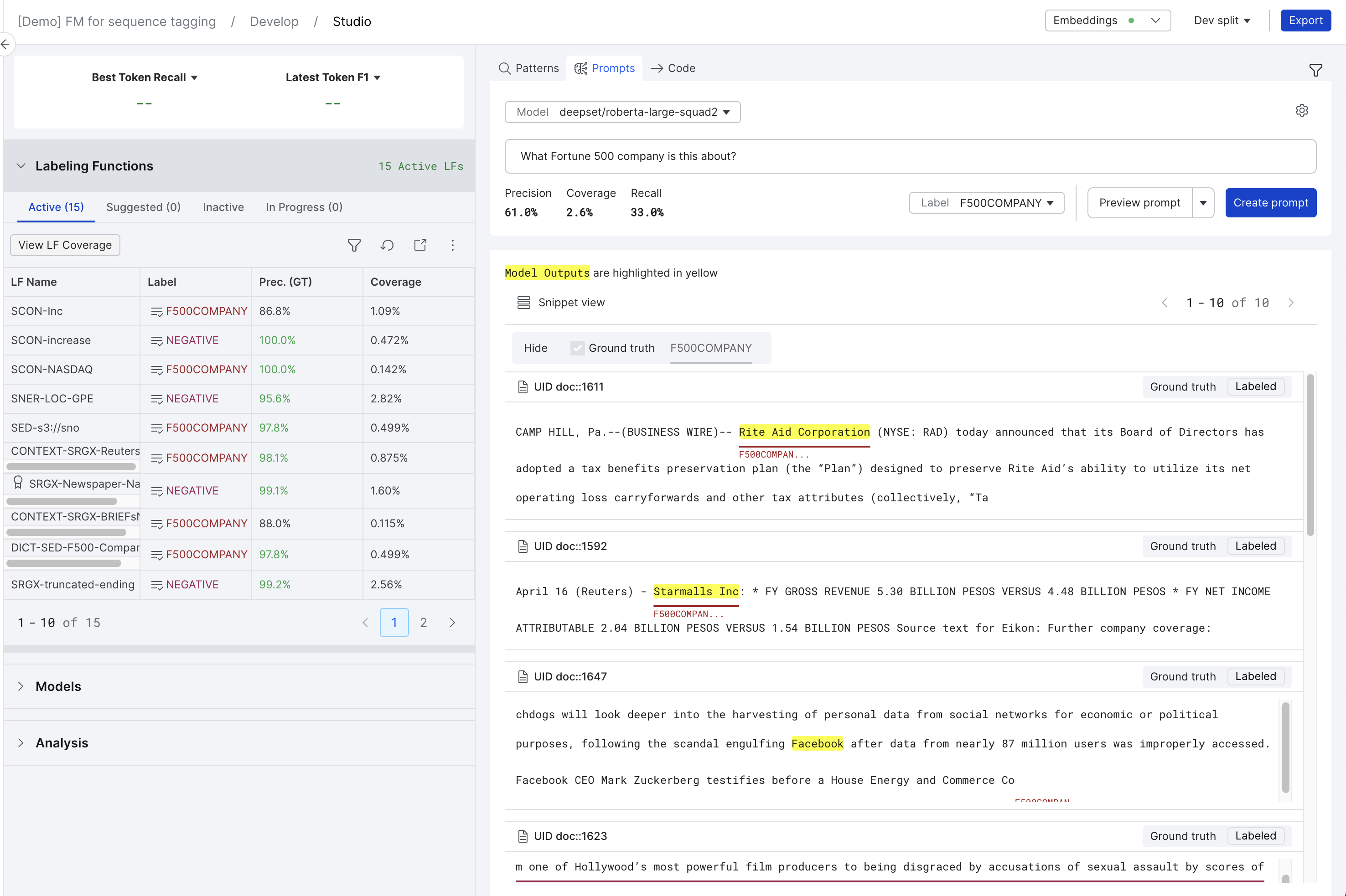

Question answering prompt workflow

The question answering workflow provides a simple interface for extracting information from within your documents. Similar to the freeform workflow, you should feel empowered to explore a variety of prompt engineering techniques to see how well they perform on your data. This workflow involves asking a question about your document. The model then extracts the spans from the text that it thinks best answers your question.

This workflow is enabled for sequence tagging utilizing extractive question answering models:

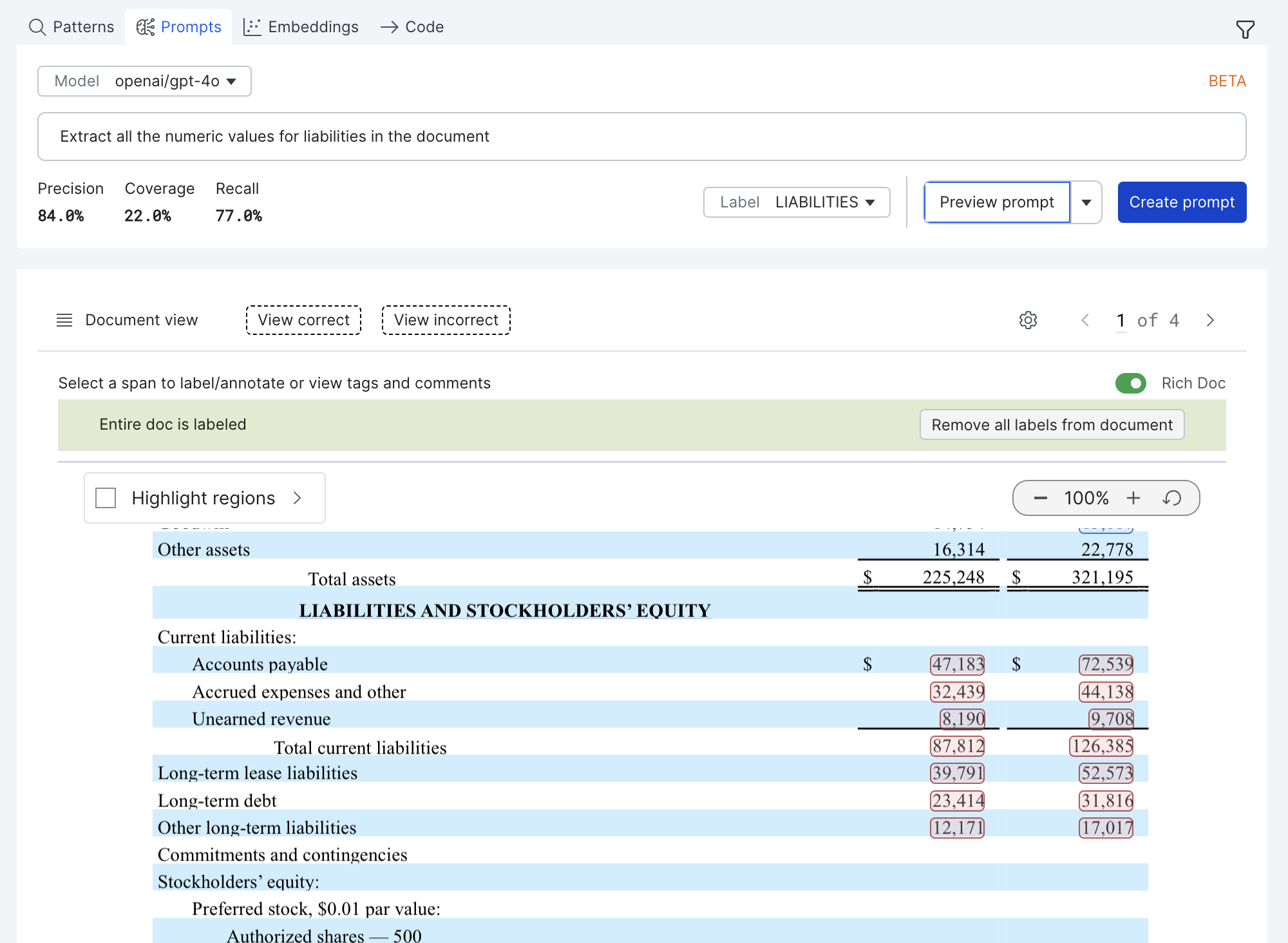

This workflow is also available for PDF extraction applications utilizing extractive visual question answering models, such as LayoutLM, as well as generative text2text models.

The prompting workflow can be accessed through the 'Prompts' tab in Studio for PDF Applications

Select the desired model to run the prompt against. All the Text2Text models configured in the FM Management UI should be available for selection.

Select the label in the drop down, the label should correspond to the entity to be extracted from the PDF documents.

Write a freeform prompt to extract that entity from the PDF documents, the prompt will be run against PDF document. Preview the results by clicking on the 'Preview LF' button. Review the extracted spans and the metrics for the Preview Sample.

Save the LF and run it on the entire dataset by clicking the Create LF button.

Advanced Features

Thresholding model confidence

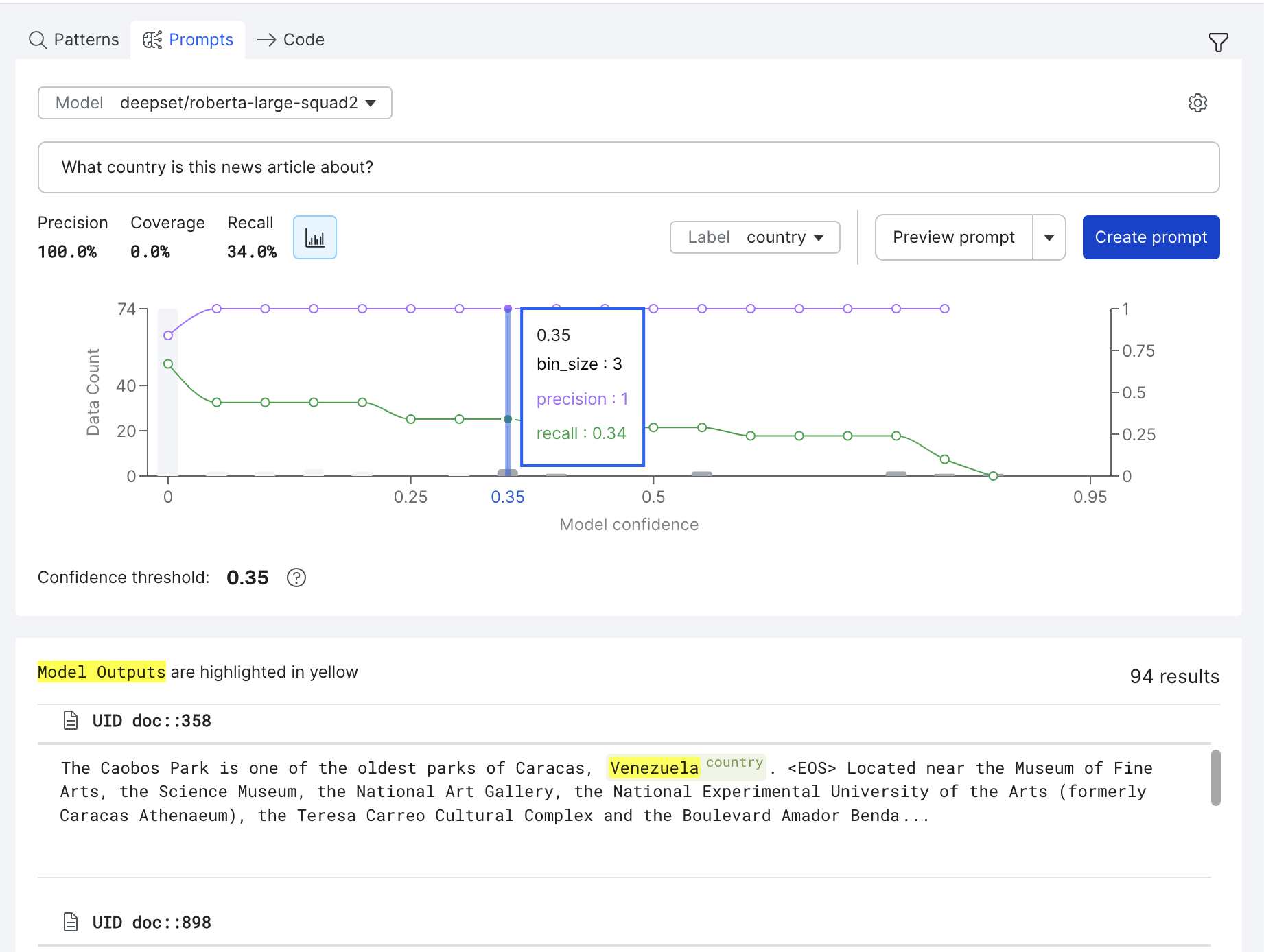

Model confidence is a number between 0 and 1 for each datapoint/row that indicates the foundation model's certainty that its prediction is correct. Extractive models provide a confidence score with each prediction. In some cases, thresholding this confidence provides a useful tradeoff between precision and recall. To visualize this, click the Graph icon to open a threshold metrics view.

In this view, the purple line (above) shows the precision of the LF at different thresholds, while the green line (below) shows the recall of the LF at different thresholds. Additionally, histogram bars represent the number of examples that fall into each threshold bucket.

You can control the threshold used by selecting different operating points on the graph.

Prompting with document chunking (RAG)

Document chunking is a retrieval-augmented generation (RAG) technique that is available within the question answering prompt workflows in sequence tagging applications. This aims to improve prompt results, particularly on longer documents. Leveraging RAG unlocks prompting on long documents that may fall outside of the maximum context length of the foundation model. RAG can also help circumvent the “Lost in the Middle'' phenomenon, where the length of the document in the input context affects the language model's ability to access and utilize information effectively, by only including the most relevant document information in the input context. In addition, RAG can reduce the total inference cost of prompting with language models by reducing the number of input tokens that are used in each prompt.

Follow these steps to enable document chunking with RAG on your application:

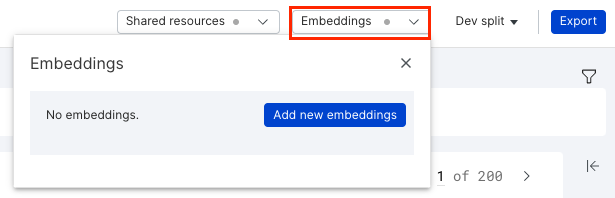

- First, you'll need to compute embeddings for RAG. Click Embeddings in the application data pane (top-right corner of your screen). Then, if no RAG embeddings exist, select Add new embeddings.

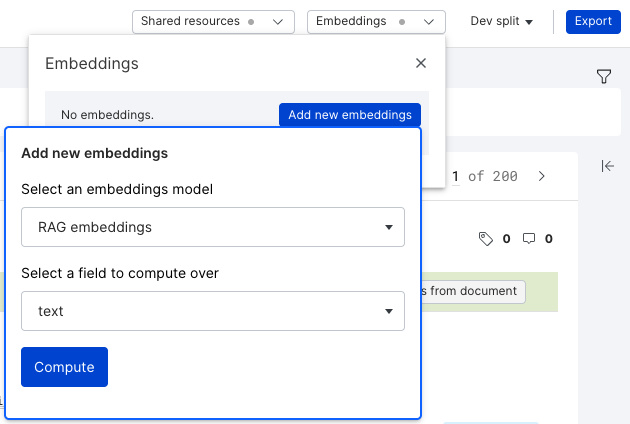

- Once the Add new embeddings modal pops up, select RAG embeddings as the embeddings model to use, and then select the primary text field of the application. This should be the text column that contains the information that you would like to prompt with. Finally, kick off embeddings by selecting Compute, which will then trigger a success notification that these are computing.

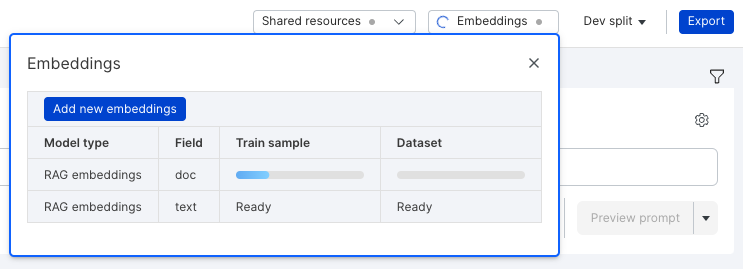

- The time to generate RAG embeddings depends on the size and composition of the dataset. View the progress of the embeddings by hovering over the Train sample and Dataset columns in the embedding home.

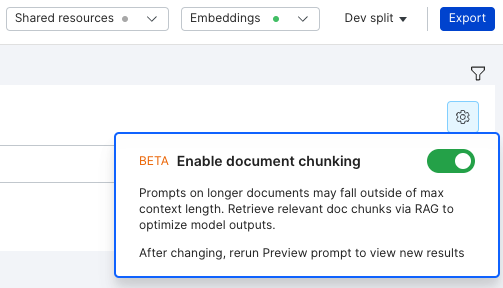

- Once RAG embeddings have been generated, document chunking can be enabled by clicking the settings icon to the right of the prompt toolbar. Simply toggle document chunking on and then click Preview prompt.

- After clicking Preview prompt with document chunking enabled, you can observe the previewed results stream back in real time.

- Finally, click Create prompt to create a prompt labeling function for use in the broader Snorkel data-centric workflow.