Snorkel Flow architecture overview

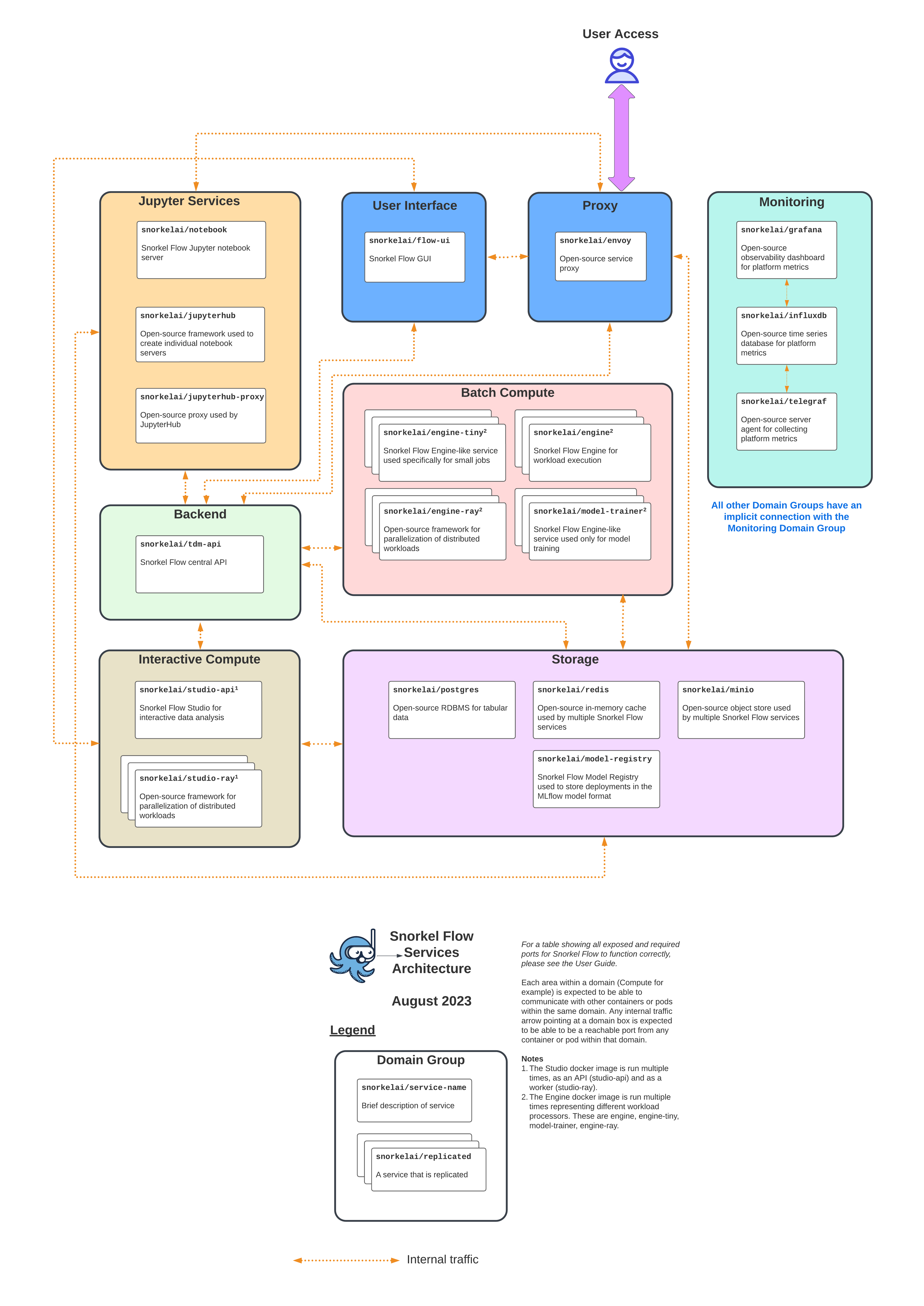

The Snorkel Flow architecture consists of several building blocks that work together, as shown in the illustration above.

From a foundation level, the platform is an aggregation of the following services:

1. Jupyter Services

This service is made up of the open-source framework used to create individual notebook servers, along with the open-source proxy used by JupyterHub.

2. User Interface

This is the Snorkel Flow graphical user interface, where users perform data labeling, data management, and annotation workflows directly on the platform.

3. Proxy

This is the open-source service proxy that serves as the main entry point into the platform.
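A single entry point typically forwards each incoming request to the right internal service based on its path. The minimal sketch below illustrates that idea with longest-prefix matching. The path prefixes and the prefix-to-service mapping are hypothetical assumptions made for illustration only; this overview does not specify which proxy product Snorkel Flow uses or how its routes are configured.

```python
from collections.abc import Mapping

# Hypothetical route table: these path prefixes and the prefix-to-service
# mapping are illustrative assumptions, not Snorkel Flow's proxy configuration.
ROUTES: dict[str, str] = {
    "/api/": "tdm-api",         # central API services
    "/studio/": "studio-api",   # interactive data analysis
    "/jupyter/": "jupyterhub",  # notebook servers
    "/": "ui",                  # everything else goes to the web UI
}


def resolve_upstream(path: str, routes: Mapping[str, str] = ROUTES) -> str:
    """Pick the upstream service for a request path by longest-prefix match."""
    candidates = [prefix for prefix in routes if path.startswith(prefix)]
    if not candidates:
        raise LookupError(f"no route matches {path!r}")
    # The most specific (longest) matching prefix wins, so "/api/..." is not
    # swallowed by the catch-all "/" route.
    return routes[max(candidates, key=len)]


if __name__ == "__main__":
    for p in ["/api/datasets", "/studio/session/7", "/labeling"]:
        print(p, "->", resolve_upstream(p))
```

Longest-prefix matching is a common convention in reverse proxies because it lets a catch-all route coexist with more specific ones; the actual matching rules depend on the proxy in use.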

4. Batch Compute

This section comprises the engine services for small jobs, workload execution, parallelization of distributed workloads, and model training.

5. Backend Services

This layer is made up of the tdm-api, which forms the backbone of Snorkel Flow's central API services.

6. Storage Services

This layer is made up of Postgres, an open-source relational database (RDBMS) for tabular data; Redis, an open-source in-memory cache; MinIO, open-source object storage; and the Snorkel Flow Model Registry, which is used to store MLflow deployments.

7. Interactive Compute

This part of the platform is represented by studio-api, which provides interactive data analysis, and studio-ray, the open-source framework for parallelizing distributed workloads.

8. Monitoring

This section provides telemetry and observability for the platform. It is made up of Grafana, InfluxDB, and Telegraf, which provide an observability dashboard for platform metrics, a time-series database, and platform metrics collection, respectively.
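When Telegraf uses its standard InfluxDB output, it sends metrics in InfluxDB line protocol, a text format of the form `measurement,tag_key=tag_value field_key=field_value timestamp`. The minimal sketch below formats one such point, assuming that standard Telegraf-to-InfluxDB path. The measurement, tag, and field names in the example are hypothetical, and the sketch implements only the basic escaping rules (commas, spaces, and equals signs in names; quoted string fields), not the full specification.

```python
from collections.abc import Mapping
from typing import Optional, Union

FieldValue = Union[bool, int, float, str]


def _escape(name: str) -> str:
    # Tag keys, tag values, and field keys escape commas, equals signs, and spaces.
    return name.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def _format_field(value: FieldValue) -> str:
    # bool must be checked before int, because bool is a subclass of int.
    if isinstance(value, bool):
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integers carry an "i" suffix
    if isinstance(value, float):
        return repr(value)
    if isinstance(value, str):
        escaped = value.replace("\\", "\\\\").replace('"', '\\"')
        return f'"{escaped}"'
    raise TypeError(f"unsupported field type: {type(value).__name__}")


def to_line_protocol(
    measurement: str,
    tags: Mapping[str, str],
    fields: Mapping[str, FieldValue],
    timestamp_ns: Optional[int] = None,
) -> str:
    """Format a single metric point as one line of InfluxDB line protocol."""
    if not fields:
        raise ValueError("line protocol requires at least one field")
    # Measurement names escape commas and spaces only.
    line = measurement.replace(",", r"\,").replace(" ", r"\ ")
    # Tags sorted by key, which InfluxDB recommends for write performance.
    line += "".join(f",{_escape(k)}={_escape(v)}" for k, v in sorted(tags.items()))
    line += " " + ",".join(f"{_escape(k)}={_format_field(v)}" for k, v in fields.items())
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"  # nanosecond-precision Unix timestamp
    return line


if __name__ == "__main__":
    # Hypothetical metric: CPU usage reported for one platform service.
    print(to_line_protocol(
        "cpu",
        {"service": "tdm-api", "host": "node-1"},
        {"usage_percent": 42.5, "cores": 8},
        1700000000000000000,
    ))
```

Run as a script, this prints `cpu,host=node-1,service=tdm-api usage_percent=42.5,cores=8i 1700000000000000000`, which is the kind of record InfluxDB stores and Grafana can then query for the observability dashboard.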

Implementation context

The Snorkel Flow platform is designed to be deployed on Kubernetes environments hosted on AWS (EKS), Azure (AKS), and GCP (GKE), as well as on private cloud environments. To discuss which deployment options are a good fit for you, contact a Snorkel AI representative.